First, create the elk namespace:

kubectl create namespace elk

Alternatively, create it from a manifest:

cat namespace.yml

kind: Namespace
apiVersion: v1
metadata:
  name: elk

kubectl apply -f namespace.yml
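As a minimal sketch, the same manifest can be written from the shell with a here-document, which keeps the YAML nesting explicit. The scratch directory is an assumption made for illustration, and the apply step is left as a comment because it needs access to the cluster.

```shell
# Write namespace.yml with explicit YAML nesting (name must sit under metadata).
workdir="$(mktemp -d)"            # hypothetical scratch directory
manifest="$workdir/namespace.yml"

cat > "$manifest" <<'EOF'
kind: Namespace
apiVersion: v1
metadata:
  name: elk
EOF

cat "$manifest"

# Then apply it against the cluster:
#   kubectl apply -f "$manifest"
```

Keeping the manifest in a file makes the step repeatable: kubectl apply can be run again without failing when the namespace already exists.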

Download the charts and switch to the 7.9 branch:

git clone https://github.com/elastic/helm-charts.git

cd helm-charts/

git checkout 7.9

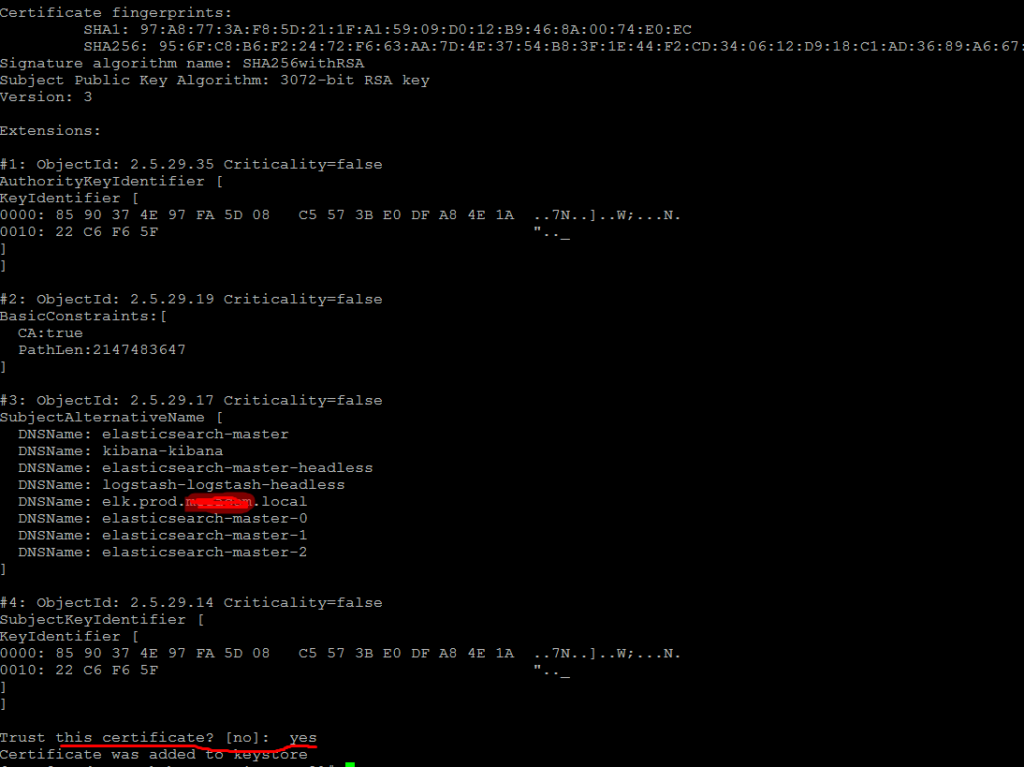

Next, generate the certificates that the whole stack will rely on.

[root@prod-vsrv-kubemaster1 helm-charts]# mkdir /certs

[root@prod-vsrv-kubemaster1 helm-charts]# cd /certs/

Create a file listing our domain names (Logstash insists that the domain is present in the certificate):

cat cnf

[req]

distinguished_name = req_distinguished_name

x509_extensions = v3_req

prompt = no

[req_distinguished_name]

C = KG

ST = Bishkek

L = Bishkek

O = test

CN = elasticsearch-master

[v3_req]

subjectKeyIdentifier = hash

authorityKeyIdentifier = keyid,issuer

basicConstraints = CA:TRUE

subjectAltName = @alt_names

[alt_names]

DNS.1 = elasticsearch-master

DNS.2 = kibana-kibana

DNS.3 = elasticsearch-master-headless

DNS.4 = logstash-logstash-headless

DNS.5 = elk.prod.test.local

DNS.6 = elasticsearch-master-0

DNS.7 = elasticsearch-master-1

DNS.8 = elasticsearch-master-2

openssl genpkey -aes-256-cbc -pass pass:123456789 -algorithm RSA -out mysite.key -pkeyopt rsa_keygen_bits:3072

The next command asks for the pass phrase of the key. Because the cnf file sets prompt = no, the certificate fields are taken from that file rather than entered by hand.

I used the password 123456789 everywhere.

openssl req -new -x509 -key mysite.key -sha256 -config cnf -out mysite.crt -days 7300

Enter pass phrase for mysite.key:

Create the PKCS#12 bundle that Elasticsearch needs:

openssl pkcs12 -export -in mysite.crt -inkey mysite.key -out identity.p12 -name "mykey"

Enter pass phrase for mysite.key: (enter our password, 123456789)

Enter Export Password: (leave empty)

Verifying - Enter Export Password: (leave empty)

Then import the certificate into a Java truststore:

docker run --rm -v /certs:/certs -it openjdk:oracle keytool -import -file /certs/mysite.crt -keystore /certs/trust.jks -storepass 123456789

A prompt appears asking whether we trust the certificate; answer yes.

Extract the private key so that we also have a copy without a password:

openssl rsa -in mysite.key -out mysite-without-pass.key

Enter pass phrase for mysite.key:

writing RSA key

Everything is ready; all the certificates Elasticsearch needs have been generated:

[root@prod-vsrv-kubemaster1 certs]# ll
total 32
-rw-r--r-- 1 root root  575 Feb 10 10:15 cnf
-rw-r--r-- 1 root root 3624 Feb 10 10:15 identity.p12
-rw-r--r-- 1 root root 1935 Feb 10 10:15 mysite.crt
-rw-r--r-- 1 root root 2638 Feb 10 10:15 mysite.key
-rw-r--r-- 1 root root 2459 Feb 10 10:39 mysite-without-pass.key
-rw-r--r-- 1 root root 1682 Feb 10 10:15 trust.jks
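Since Logstash is strict about the names in the certificate, it can help to check that the expected DNS entries really made it into mysite.crt. The sketch below is only an illustration: list_sans is a hypothetical helper that parses the text form printed by openssl x509, and the demo runs on a hand-written sample of that output rather than on the real file.

```shell
# Prints every DNS name found in `openssl x509 -noout -text` output read on stdin.
list_sans() {
  grep -o 'DNS:[^,[:space:]]*' | sed 's/^DNS://'
}

# Hand-written sample of the relevant part of the openssl output (assumption).
sample='            X509v3 Subject Alternative Name:
                DNS:elasticsearch-master, DNS:kibana-kibana, DNS:elk.prod.test.local'

printf '%s\n' "$sample" | list_sans

# Against the real certificate (requires openssl and the file generated above):
#   openssl x509 -in /certs/mysite.crt -noout -text | list_sans
```

Each name is printed on its own line, so the result can be compared with the [alt_names] section of the cnf file.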

Create a secret with the certificates:

[root@prod-vsrv-kubemaster1 certs]# kubectl create secret generic elastic-certificates -n elk --from-file=identity.p12 --from-file=mysite.crt --from-file=mysite.key --from-file=mysite-without-pass.key --from-file=trust.jks

Create a secret with the login and password for Elasticsearch:

kubectl create secret generic secret-basic-auth -n elk --from-literal=password=elastic --from-literal=username=elastic
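Kubernetes stores secret values base64-encoded, so a quick way to confirm what ended up in secret-basic-auth is to decode a value. This is a small sketch; decode_secret_value is a hypothetical helper, and the kubectl call is shown only as a comment because it needs cluster access.

```shell
# Decodes one base64-encoded Secret value passed as the first argument.
decode_secret_value() {
  printf '%s' "$1" | base64 -d
}

# "ZWxhc3RpYw==" is the base64 form of the literal "elastic" used above.
decode_secret_value 'ZWxhc3RpYw=='
echo

# Against the cluster:
#   kubectl get secret secret-basic-auth -n elk -o jsonpath='{.data.password}' | base64 -d
```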

Note that an nfs-provisioner is already installed in our cluster.

Now configure the values for Elasticsearch in elasticsearch/values.yaml.

Here we enable X-Pack security, add the certificates, and set the directory for snapshots:

esConfig:
  elasticsearch.yml: |
    path.repo: /snapshot
    xpack.security.enabled: true
    xpack.security.transport.ssl.enabled: true
    xpack.security.transport.ssl.verification_mode: certificate
    xpack.security.transport.ssl.keystore.path: /usr/share/elasticsearch/config/certs/identity.p12
    xpack.security.transport.ssl.truststore.path: /usr/share/elasticsearch/config/certs/identity.p12
    xpack.security.http.ssl.enabled: true
    xpack.security.http.ssl.truststore.path: /usr/share/elasticsearch/config/certs/identity.p12
    xpack.security.http.ssl.keystore.path: /usr/share/elasticsearch/config/certs/identity.p12

path.repo: /snapshot is our snapshot directory; snapshots will be stored in a separate volume.

We also pass in, as environment variables, the login and password that we set when creating the secret:

extraEnvs:
  - name: ELASTIC_PASSWORD
    valueFrom:
      secretKeyRef:
        name: secret-basic-auth
        key: password
  - name: ELASTIC_USERNAME
    valueFrom:
      secretKeyRef:
        name: secret-basic-auth
        key: username

Mount the directory with the certificates:

secretMounts:
  - name: elastic-certificates
    secretName: elastic-certificates
    path: /usr/share/elasticsearch/config/certs

Point the volume claim at our NFS provisioner:

volumeClaimTemplate:
  accessModes: [ "ReadWriteOnce" ]
  storageClassName: nfs-storageclass
  resources:
    requests:
      storage: 3Gi

Set antiAffinity to soft. We have only 2 worker nodes while Elasticsearch runs 3 pods, so with the default hard rule the third pod cannot be scheduled. The soft setting allows two pods of the Elasticsearch cluster to run on the same node.

antiAffinity: "soft"

Switch the protocol to https:

protocol: https

The complete file looks like this:

[root@prod-vsrv-kubemaster1 helm-charts]# cat elasticsearch/values.yaml

---

clusterName: "elasticsearch"

nodeGroup: "master"

# The service that non master groups will try to connect to when joining the cluster

# This should be set to clusterName + "-" + nodeGroup for your master group

masterService: ""

# Elasticsearch roles that will be applied to this nodeGroup

# These will be set as environment variables. E.g. node.master=true

roles:

master: "true"

ingest: "true"

data: "true"

remote_cluster_client: "true"

# ml: "true" # ml is not availble with elasticsearch-oss

replicas: 3

minimumMasterNodes: 2

esMajorVersion: ""

# Allows you to add any config files in /usr/share/elasticsearch/config/

# such as elasticsearch.yml and log4j2.properties

esConfig:

elasticsearch.yml: |

path.repo: /snapshot

xpack.security.enabled: true

xpack.security.transport.ssl.enabled: true

xpack.security.transport.ssl.verification_mode: certificate

xpack.security.transport.ssl.keystore.path: /usr/share/elasticsearch/config/certs/identity.p12

xpack.security.transport.ssl.truststore.path: /usr/share/elasticsearch/config/certs/identity.p12

xpack.security.http.ssl.enabled: true

xpack.security.http.ssl.truststore.path: /usr/share/elasticsearch/config/certs/identity.p12

xpack.security.http.ssl.keystore.path: /usr/share/elasticsearch/config/certs/identity.p12

# key:

# nestedkey: value

# log4j2.properties: |

# key = value

# Extra environment variables to append to this nodeGroup

# This will be appended to the current 'env:' key. You can use any of the kubernetes env

# syntax here

extraEnvs:

- name: ELASTIC_PASSWORD

valueFrom:

secretKeyRef:

name: secret-basic-auth

key: password

- name: ELASTIC_USERNAME

valueFrom:

secretKeyRef:

name: secret-basic-auth

key: username

# - name: MY_ENVIRONMENT_VAR

# value: the_value_goes_here

# Allows you to load environment variables from kubernetes secret or config map

envFrom: []

# - secretRef:

# name: env-secret

# - configMapRef:

# name: config-map

# A list of secrets and their paths to mount inside the pod

# This is useful for mounting certificates for security and for mounting

# the X-Pack license

secretMounts:

- name: elastic-certificates

secretName: elastic-certificates

path: /usr/share/elasticsearch/config/certs

# defaultMode: 0755

image: "docker.elastic.co/elasticsearch/elasticsearch"

imageTag: "7.9.4-SNAPSHOT"

imagePullPolicy: "IfNotPresent"

podAnnotations: {}

# iam.amazonaws.com/role: es-cluster

# additionals labels

labels: {}

esJavaOpts: "-Xmx1g -Xms1g"

resources:

requests:

cpu: "1000m"

memory: "2Gi"

limits:

cpu: "1000m"

memory: "2Gi"

initResources: {}

# limits:

# cpu: "25m"

# # memory: "128Mi"

# requests:

# cpu: "25m"

# memory: "128Mi"

sidecarResources: {}

# limits:

# cpu: "25m"

# # memory: "128Mi"

# requests:

# cpu: "25m"

# memory: "128Mi"

networkHost: "0.0.0.0"

volumeClaimTemplate:

accessModes: [ "ReadWriteOnce" ]

storageClassName: nfs-storageclass

resources:

requests:

storage: 3Gi

rbac:

create: false

serviceAccountAnnotations: {}

serviceAccountName: ""

podSecurityPolicy:

create: false

name: ""

spec:

privileged: true

fsGroup:

rule: RunAsAny

runAsUser:

rule: RunAsAny

seLinux:

rule: RunAsAny

supplementalGroups:

rule: RunAsAny

volumes:

- secret

- configMap

- persistentVolumeClaim

persistence:

enabled: true

labels:

# Add default labels for the volumeClaimTemplate fo the StatefulSet

enabled: false

annotations: {}

extraVolumes: []

# - name: extras

# emptyDir: {}

extraVolumeMounts: []

# - name: extras

# mountPath: /usr/share/extras

# readOnly: true

extraContainers: []

# - name: do-something

# image: busybox

# command: ['do', 'something']

extraInitContainers: []

# - name: do-something

# image: busybox

# command: ['do', 'something']

# This is the PriorityClass settings as defined in

# https://kubernetes.io/docs/concepts/configuration/pod-priority-preemption/#priorityclass

priorityClassName: ""

# By default this will make sure two pods don't end up on the same node

# Changing this to a region would allow you to spread pods across regions

antiAffinityTopologyKey: "kubernetes.io/hostname"

# Hard means that by default pods will only be scheduled if there are enough nodes for them

# and that they will never end up on the same node. Setting this to soft will do this "best effort"

antiAffinity: "soft"

# This is the node affinity settings as defined in

# https://kubernetes.io/docs/concepts/configuration/assign-pod-node/#node-affinity-beta-feature

nodeAffinity: {}

# The default is to deploy all pods serially. By setting this to parallel all pods are started at

# the same time when bootstrapping the cluster

podManagementPolicy: "Parallel"

# The environment variables injected by service links are not used, but can lead to slow Elasticsearch boot times when

# there are many services in the current namespace.

# If you experience slow pod startups you probably want to set this to `false`.

enableServiceLinks: true

protocol: https

httpPort: 9200

transportPort: 9300

service:

labels: {}

labelsHeadless: {}

type: ClusterIP

nodePort: ""

annotations: {}

httpPortName: http

transportPortName: transport

loadBalancerIP: ""

loadBalancerSourceRanges: []

externalTrafficPolicy: ""

updateStrategy: RollingUpdate

# This is the max unavailable setting for the pod disruption budget

# The default value of 1 will make sure that kubernetes won't allow more than 1

# of your pods to be unavailable during maintenance

maxUnavailable: 1

podSecurityContext:

fsGroup: 1000

runAsUser: 1000

securityContext:

capabilities:

drop:

- ALL

# readOnlyRootFilesystem: true

runAsNonRoot: true

runAsUser: 1000

# How long to wait for elasticsearch to stop gracefully

terminationGracePeriod: 120

sysctlVmMaxMapCount: 262144

readinessProbe:

failureThreshold: 3

initialDelaySeconds: 10

periodSeconds: 10

successThreshold: 3

timeoutSeconds: 5

# https://www.elastic.co/guide/en/elasticsearch/reference/7.9/cluster-health.html#request-params wait_for_status

clusterHealthCheckParams: "wait_for_status=green&timeout=1s"

## Use an alternate scheduler.

## ref: https://kubernetes.io/docs/tasks/administer-cluster/configure-multiple-schedulers/

##

schedulerName: ""

imagePullSecrets: []

nodeSelector: {}

tolerations: []

# Enabling this will publically expose your Elasticsearch instance.

# Only enable this if you have security enabled on your cluster

ingress:

enabled: false

annotations: {}

# kubernetes.io/ingress.class: nginx

# kubernetes.io/tls-acme: "true"

path: /

hosts:

- chart-example.local

tls: []

# - secretName: chart-example-tls

# hosts:

# - chart-example.local

nameOverride: ""

fullnameOverride: ""

# https://github.com/elastic/helm-charts/issues/63

masterTerminationFix: false

lifecycle: {}

# preStop:

# exec:

# command: ["/bin/sh", "-c", "echo Hello from the postStart handler > /usr/share/message"]

# postStart:

# exec:

# command:

# - bash

# - -c

# - |

# #!/bin/bash

# # Add a template to adjust number of shards/replicas

# TEMPLATE_NAME=my_template

# INDEX_PATTERN="logstash-*"

# SHARD_COUNT=8

# REPLICA_COUNT=1

# ES_URL=http://localhost:9200

# while [[ "$(curl -s -o /dev/null -w '%{http_code}\n' $ES_URL)" != "200" ]]; do sleep 1; done

# curl -XPUT "$ES_URL/_template/$TEMPLATE_NAME" -H 'Content-Type: application/json' -d'{"index_patterns":['\""$INDEX_PATTERN"\"'],"settings":{"number_of_shards":'$SHARD_COUNT',"number_of_replicas":'$REPLICA_COUNT'}}'

sysctlInitContainer:

enabled: true

keystore: []

# Deprecated

# please use the above podSecurityContext.fsGroup instead

fsGroup: ""

========================================================================================

Now configure Kibana in kibana/values.yaml.

Change http to https:

elasticsearchHosts: "https://elasticsearch-master:9200"

Pass the credentials from the secret:

extraEnvs:
  - name: 'ELASTICSEARCH_USERNAME'
    valueFrom:
      secretKeyRef:
        name: secret-basic-auth
        key: username
  - name: 'ELASTICSEARCH_PASSWORD'
    valueFrom:
      secretKeyRef:
        name: secret-basic-auth
        key: password

Mount the directory with the certificates:

secretMounts:
  - name: elastic-certificates
    secretName: elastic-certificates
    path: /usr/share/kibana/config/certs

Add the certificate paths to the main Kibana config:

kibanaConfig:
  kibana.yml: |
    server.ssl:
      enabled: true
      key: /usr/share/kibana/config/certs/mysite-without-pass.key
      certificate: /usr/share/kibana/config/certs/mysite.crt
    xpack.security.encryptionKey: "something_at_least_32_characters"
    elasticsearch.ssl:
      certificateAuthorities: /usr/share/kibana/config/certs/mysite.crt
      verificationMode: certificate
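The encryptionKey value above is a placeholder. One possible way to produce a random string of the required length is sketched here; gen_encryption_key is a hypothetical helper, and 16 random bytes printed as hex give exactly 32 characters.

```shell
# Generate 32 random hex characters (16 random bytes) for xpack.security.encryptionKey.
gen_encryption_key() {
  head -c 16 /dev/urandom | od -An -tx1 | tr -d ' \n'
}

key="$(gen_encryption_key)"
echo "xpack.security.encryptionKey: \"$key\""
```

The printed line can be pasted into kibana.yml in place of the placeholder. Keep the value stable across restarts, since Kibana uses it to encrypt stored session data.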

Configure the ingress so that Kibana opens on our domain. Note that the line

nginx.ingress.kubernetes.io/backend-protocol: "HTTPS"

is required, because without it the ingress proxies everything to the backend over HTTP by default.

ingress:
  enabled: true
  annotations:
    # kubernetes.io/ingress.class: nginx
    # kubernetes.io/tls-acme: "true"
    nginx.ingress.kubernetes.io/backend-protocol: "HTTPS"
  path: /
  hosts:
    - elk.prod.test.local

The complete file looks like this:

cat kibana/values.yaml

---

elasticsearchHosts: "https://elasticsearch-master:9200"

replicas: 1

# Extra environment variables to append to this nodeGroup

# This will be appended to the current 'env:' key. You can use any of the kubernetes env

# syntax here

extraEnvs:

- name: 'ELASTICSEARCH_USERNAME'

valueFrom:

secretKeyRef:

name: secret-basic-auth

key: username

- name: 'ELASTICSEARCH_PASSWORD'

valueFrom:

secretKeyRef:

name: secret-basic-auth

key: password

# - name: "NODE_OPTIONS"

# value: "--max-old-space-size=1800"

# - name: MY_ENVIRONMENT_VAR

# value: the_value_goes_here

# Allows you to load environment variables from kubernetes secret or config map

envFrom: []

# - secretRef:

# name: env-secret

# - configMapRef:

# name: config-map

# A list of secrets and their paths to mount inside the pod

# This is useful for mounting certificates for security and for mounting

# the X-Pack license

secretMounts:

- name: elastic-certificates

secretName: elastic-certificates

path: /usr/share/kibana/config/certs

# - name: kibana-keystore

# secretName: kibana-keystore

# path: /usr/share/kibana/data/kibana.keystore

# subPath: kibana.keystore # optional

image: "docker.elastic.co/kibana/kibana"

imageTag: "7.9.4-SNAPSHOT"

imagePullPolicy: "IfNotPresent"

# additionals labels

labels: {}

podAnnotations: {}

# iam.amazonaws.com/role: es-cluster

resources:

requests:

cpu: "1000m"

memory: "2Gi"

limits:

cpu: "1000m"

memory: "2Gi"

protocol: https

serverHost: "0.0.0.0"

healthCheckPath: "/app/kibana"

# Allows you to add any config files in /usr/share/kibana/config/

# such as kibana.yml

kibanaConfig:

kibana.yml: |

server.ssl:

enabled: true

key: /usr/share/kibana/config/certs/mysite-without-pass.key

certificate: /usr/share/kibana/config/certs/mysite.crt

xpack.security.encryptionKey: "something_at_least_32_characters"

elasticsearch.ssl:

certificateAuthorities: /usr/share/kibana/config/certs/mysite.crt

verificationMode: certificate

# kibana.yml: |

# key:

# nestedkey: value

# If Pod Security Policy in use it may be required to specify security context as well as service account

podSecurityContext:

fsGroup: 1000

securityContext:

capabilities:

drop:

- ALL

# readOnlyRootFilesystem: true

runAsNonRoot: true

runAsUser: 1000

serviceAccount: ""

# This is the PriorityClass settings as defined in

# https://kubernetes.io/docs/concepts/configuration/pod-priority-preemption/#priorityclass

priorityClassName: ""

httpPort: 5601

extraContainers: ""

# - name: dummy-init

# image: busybox

# command: ['echo', 'hey']

extraInitContainers: ""

# - name: dummy-init

# image: busybox

# command: ['echo', 'hey']

updateStrategy:

type: "Recreate"

service:

type: ClusterIP

loadBalancerIP: ""

port: 5601

nodePort: ""

labels: {}

annotations: {}

# cloud.google.com/load-balancer-type: "Internal"

# service.beta.kubernetes.io/aws-load-balancer-internal: 0.0.0.0/0

# service.beta.kubernetes.io/azure-load-balancer-internal: "true"

# service.beta.kubernetes.io/openstack-internal-load-balancer: "true"

# service.beta.kubernetes.io/cce-load-balancer-internal-vpc: "true"

loadBalancerSourceRanges: []

# 0.0.0.0/0

ingress:

enabled: true

annotations:

# kubernetes.io/ingress.class: nginx

# kubernetes.io/tls-acme: "true"

nginx.ingress.kubernetes.io/backend-protocol: "HTTPS"

path: /

hosts:

- elk.prod.test.local

tls: []

# - secretName: chart-example-tls

# hosts:

# - chart-example.local

readinessProbe:

failureThreshold: 3

initialDelaySeconds: 10

periodSeconds: 10

successThreshold: 3

timeoutSeconds: 5

imagePullSecrets: []

nodeSelector: {}

tolerations: []

affinity: {}

nameOverride: ""

fullnameOverride: ""

lifecycle: {}

# preStop:

# exec:

# command: ["/bin/sh", "-c", "echo Hello from the postStart handler > /usr/share/message"]

# postStart:

# exec:

# command: ["/bin/sh", "-c", "echo Hello from the postStart handler > /usr/share/message"]

# Deprecated - use only with versions < 6.6

elasticsearchURL: "" # "http://elasticsearch-master:9200"

==========================================================================================

Now configure Logstash in logstash/values.yaml.

Enable X-Pack monitoring:

logstashConfig:
  logstash.yml: |
    http.host: 0.0.0.0
    xpack.monitoring.enabled: true
    xpack.monitoring.elasticsearch.username: '${ELASTICSEARCH_USERNAME}'
    xpack.monitoring.elasticsearch.password: '${ELASTICSEARCH_PASSWORD}'
    xpack.monitoring.elasticsearch.hosts: [ "https://elasticsearch-master:9200" ]
    xpack.monitoring.elasticsearch.ssl.certificate_authority: /usr/share/logstash/config/certs/mysite.crt

Set the beats input to listen on port 5045: port 5044 is brought up automatically, so leaving the input on 5044 would cause a port conflict. We also add a filter by namespace: logs coming from the terminal-soft namespace get a tag and lose a few unneeded fields, and are then sent to Elasticsearch with an index name and the name of an ILM policy. The policy must be created beforehand.

logstashPipeline:
  logstash.conf: |
    input {
      exec { command => "uptime" interval => 30 }
      beats {
        port => 5045
      }
    }
    filter {
      if [kubernetes][namespace] == "terminal-soft" {
        mutate {
          add_tag => "tag-terminal-soft"
          remove_field => ["[agent][name]","[agent][version]","[host][mac]","[host][ip]"]
        }
      }
    }
    output {
      if "tag-terminal-soft" in [tags] {
        elasticsearch {
          hosts => [ "https://elasticsearch-master:9200" ]
          cacert => "/usr/share/logstash/config/certs/mysite.crt"
          manage_template => false
          index => "terminal-soft-%{+YYYY.MM.dd}"
          ilm_rollover_alias => "terminal-soft"
          ilm_policy => "terminal-soft"
          user => '${ELASTICSEARCH_USERNAME}'
          password => '${ELASTICSEARCH_PASSWORD}'
        }
      }
    }
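The pipeline refers to an ILM policy named terminal-soft that has to exist before Logstash starts writing. The article does not show the policy itself, so the body below is purely an illustrative assumption (daily rollover, 5 GB size cap, deletion after 7 days); the curl call is left as a comment because it needs network access to the cluster, for example from a pod or through a port-forward.

```shell
# Hypothetical ILM policy body; all thresholds are illustrative assumptions.
policy_file="$(mktemp)"
cat > "$policy_file" <<'EOF'
{
  "policy": {
    "phases": {
      "hot": {
        "actions": {
          "rollover": { "max_age": "1d", "max_size": "5gb" }
        }
      },
      "delete": {
        "min_age": "7d",
        "actions": { "delete": {} }
      }
    }
  }
}
EOF

cat "$policy_file"

# Upload it under the name the pipeline uses:
#   curl --cacert /certs/mysite.crt -u elastic:elastic \
#     -X PUT "https://elasticsearch-master:9200/_ilm/policy/terminal-soft" \
#     -H 'Content-Type: application/json' -d @"$policy_file"
```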

Specify the login and password used to connect to Elasticsearch:

extraEnvs:
  - name: 'ELASTICSEARCH_USERNAME'
    valueFrom:
      secretKeyRef:
        name: secret-basic-auth
        key: username
  - name: 'ELASTICSEARCH_PASSWORD'
    valueFrom:
      secretKeyRef:
        name: secret-basic-auth
        key: password

Mount the certificates:

secretMounts:
  - name: elastic-certificates
    secretName: elastic-certificates
    path: /usr/share/logstash/config/certs

Set soft anti-affinity:

antiAffinity: "soft"

Finally, uncomment the service section and set its target port to 5045, the port we configured in the input above:

service:
  annotations: {}
  type: ClusterIP
  ports:
    - name: beats
      port: 5044
      protocol: TCP
      targetPort: 5045

The complete file looks like this:

[root@prod-vsrv-kubemaster1 helm-charts]# cat logstash/values.yaml

---

replicas: 1

# Allows you to add any config files in /usr/share/logstash/config/

# such as logstash.yml and log4j2.properties

#

# Note that when overriding logstash.yml, `http.host: 0.0.0.0` should always be included

# to make default probes work.

logstashConfig:

logstash.yml: |

http.host: 0.0.0.0

xpack.monitoring.enabled: true

xpack.monitoring.elasticsearch.username: '${ELASTICSEARCH_USERNAME}'

xpack.monitoring.elasticsearch.password: '${ELASTICSEARCH_PASSWORD}'

xpack.monitoring.elasticsearch.hosts: [ "https://elasticsearch-master:9200" ]

xpack.monitoring.elasticsearch.ssl.certificate_authority: /usr/share/logstash/config/certs/mysite.crt

# key:

# nestedkey: value

# log4j2.properties: |

# key = value

# Allows you to add any pipeline files in /usr/share/logstash/pipeline/

### ***warn*** there is a hardcoded logstash.conf in the image, override it first

logstashPipeline:

logstash.conf: |

input {

exec { command => "uptime" interval => 30 }

beats {

port => 5045

}

}

filter {

if [kubernetes][namespace] == "terminal-soft" {

mutate {

add_tag => "tag-terminal-soft"

remove_field => ["[agent][name]","[agent][version]","[host][mac]","[host][ip]"] }

}

}

output {

if "tag-terminal-soft" in [tags] {

elasticsearch {

hosts => [ "https://elasticsearch-master:9200" ]

cacert => "/usr/share/logstash/config/certs/mysite.crt"

manage_template => false

index => "terminal-soft-%{+YYYY.MM.dd}"

ilm_rollover_alias => "terminal-soft"

ilm_policy => "terminal-soft"

user => '${ELASTICSEARCH_USERNAME}'

password => '${ELASTICSEARCH_PASSWORD}'

}

}

}

# input {

# exec {

# command => "uptime"

# interval => 30

# }

# }

# output { stdout { } }

# Extra environment variables to append to this nodeGroup

# This will be appended to the current 'env:' key. You can use any of the kubernetes env

# syntax here

extraEnvs:

- name: 'ELASTICSEARCH_USERNAME'

valueFrom:

secretKeyRef:

name: secret-basic-auth

key: username

- name: 'ELASTICSEARCH_PASSWORD'

valueFrom:

secretKeyRef:

name: secret-basic-auth

key: password

# - name: MY_ENVIRONMENT_VAR

# value: the_value_goes_here

# Allows you to load environment variables from kubernetes secret or config map

envFrom: []

# - secretRef:

# name: env-secret

# - configMapRef:

# name: config-map

# Add sensitive data to k8s secrets

secrets: []

# - name: "env"

# value:

# ELASTICSEARCH_PASSWORD: "LS1CRUdJTiBgUFJJVkFURSB"

# api_key: ui2CsdUadTiBasRJRkl9tvNnw

# - name: "tls"

# value:

# ca.crt: |

# LS0tLS1CRUdJT0K

# LS0tLS1CRUdJT0K

# LS0tLS1CRUdJT0K

# LS0tLS1CRUdJT0K

# cert.crt: "LS0tLS1CRUdJTiBlRJRklDQVRFLS0tLS0K"

# cert.key.filepath: "secrets.crt" # The path to file should be relative to the `values.yaml` file.

# A list of secrets and their paths to mount inside the pod

secretMounts:

- name: elastic-certificates

secretName: elastic-certificates

path: /usr/share/logstash/config/certs

image: "docker.elastic.co/logstash/logstash"

imageTag: "7.9.4-SNAPSHOT"

imagePullPolicy: "IfNotPresent"

imagePullSecrets: []

podAnnotations: {}

# additionals labels

labels: {}

logstashJavaOpts: "-Xmx1g -Xms1g"

resources:

requests:

cpu: "100m"

memory: "1536Mi"

limits:

cpu: "1000m"

memory: "1536Mi"

volumeClaimTemplate:

accessModes: [ "ReadWriteOnce" ]

resources:

requests:

storage: 1Gi

rbac:

create: false

serviceAccountAnnotations: {}

serviceAccountName: ""

podSecurityPolicy:

create: false

name: ""

spec:

privileged: true

fsGroup:

rule: RunAsAny

runAsUser:

rule: RunAsAny

seLinux:

rule: RunAsAny

supplementalGroups:

rule: RunAsAny

volumes:

- secret

- configMap

- persistentVolumeClaim

persistence:

enabled: false

annotations: {}

extraVolumes: ""

# - name: extras

# emptyDir: {}

extraVolumeMounts: ""

# - name: extras

# mountPath: /usr/share/extras

# readOnly: true

extraContainers: ""

# - name: do-something

# image: busybox

# command: ['do', 'something']

extraInitContainers: ""

# - name: do-something

# image: busybox

# command: ['do', 'something']

# This is the PriorityClass settings as defined in

# https://kubernetes.io/docs/concepts/configuration/pod-priority-preemption/#priorityclass

priorityClassName: ""

# By default this will make sure two pods don't end up on the same node

# Changing this to a region would allow you to spread pods across regions

antiAffinityTopologyKey: "kubernetes.io/hostname"

# Hard means that by default pods will only be scheduled if there are enough nodes for them

# and that they will never end up on the same node. Setting this to soft will do this "best effort"

antiAffinity: "soft"

# This is the node affinity settings as defined in

# https://kubernetes.io/docs/concepts/configuration/assign-pod-node/#node-affinity-beta-feature

nodeAffinity: {}

# The default is to deploy all pods serially. By setting this to parallel all pods are started at

# the same time when bootstrapping the cluster

podManagementPolicy: "Parallel"

httpPort: 9600

# Custom ports to add to logstash

extraPorts: []

# - name: beats

# containerPort: 5044

updateStrategy: RollingUpdate

# This is the max unavailable setting for the pod disruption budget

# The default value of 1 will make sure that kubernetes won't allow more than 1

# of your pods to be unavailable during maintenance

maxUnavailable: 1

podSecurityContext:

fsGroup: 1000

runAsUser: 1000

securityContext:

capabilities:

drop:

- ALL

# readOnlyRootFilesystem: true

runAsNonRoot: true

runAsUser: 1000

# How long to wait for logstash to stop gracefully

terminationGracePeriod: 120

# Probes

# Default probes are using `httpGet` which requires that `http.host: 0.0.0.0` is part of

# `logstash.yml`. If needed probes can be disabled or overrided using the following syntaxes:

#

# disable livenessProbe

# livenessProbe: null

#

# replace httpGet default readinessProbe by some exec probe

# readinessProbe:

# httpGet: null

# exec:

# command:

# - curl

# - localhost:9600

livenessProbe:

httpGet:

path: /

port: http

initialDelaySeconds: 300

periodSeconds: 10

timeoutSeconds: 5

failureThreshold: 3

successThreshold: 1

readinessProbe:

httpGet:

path: /

port: http

initialDelaySeconds: 60

periodSeconds: 10

timeoutSeconds: 5

failureThreshold: 3

successThreshold: 3

## Use an alternate scheduler.

## ref: https://kubernetes.io/docs/tasks/administer-cluster/configure-multiple-schedulers/

##

schedulerName: ""

nodeSelector: {}

tolerations: []

nameOverride: ""

fullnameOverride: ""

lifecycle: {}

# preStop:

# exec:

# command: ["/bin/sh", "-c", "echo Hello from the postStart handler > /usr/share/message"]

# postStart:

# exec:

# command: ["/bin/sh", "-c", "echo Hello from the postStart handler > /usr/share/message"]

service:

annotations: {}

type: ClusterIP

ports:

- name: beats

port: 5044

protocol: TCP

targetPort: 5045

# - name: http

# port: 8080

# protocol: TCP

# targetPort: 8080

ingress:

enabled: false

# annotations: {}

# hosts:

# - host: logstash.local

# paths:

# - path: /logs

# servicePort: 8080

# tls: []

===========================================================================================

Now configure Filebeat in filebeat/values.yaml:

filebeatConfig:
  filebeat.yml: |
    filebeat.inputs:
    - type: container
      paths:
        - /var/log/containers/*.log
      processors:
      - add_kubernetes_metadata:
          host: ${NODE_NAME}
          matchers:
          - logs_path:
              logs_path: "/var/log/containers/"
    output.logstash:
      enabled: true
      hosts: ["logstash-logstash:5044"]

The complete file looks like this:

[root@prod-vsrv-kubemaster1 helm-charts]# cat filebeat/values.yaml

---

# Allows you to add any config files in /usr/share/filebeat

# such as filebeat.yml

filebeatConfig:

filebeat.yml: |

filebeat.inputs:

- type: container

paths:

- /var/log/containers/*.log

processors:

- add_kubernetes_metadata:

host: ${NODE_NAME}

matchers:

- logs_path:

logs_path: "/var/log/containers/"

output.logstash:

enabled: true

hosts: ["logstash-logstash:5044"]

# Extra environment variables to append to the DaemonSet pod spec.

# This will be appended to the current 'env:' key. You can use any of the kubernetes env

# syntax here

extraEnvs: []

# - name: MY_ENVIRONMENT_VAR

# value: the_value_goes_here

extraVolumeMounts: []

# - name: extras

# mountPath: /usr/share/extras

# readOnly: true

extraVolumes: []

# - name: extras

# emptyDir: {}

extraContainers: ""

# - name: dummy-init

# image: busybox

# command: ['echo', 'hey']

extraInitContainers: []

# - name: dummy-init

# image: busybox

# command: ['echo', 'hey']

envFrom: []

# - configMapRef:

# name: configmap-name

# Root directory where Filebeat will write data to in order to persist registry data across pod restarts (file position and other metadata).

hostPathRoot: /var/lib

hostNetworking: false

dnsConfig: {}

# options:

# - name: ndots

# value: "2"

image: "docker.elastic.co/beats/filebeat"

imageTag: "7.9.4-SNAPSHOT"

imagePullPolicy: "IfNotPresent"

imagePullSecrets: []

livenessProbe:

exec:

command:

- sh

- -c

- |

#!/usr/bin/env bash -e

curl --fail 127.0.0.1:5066

failureThreshold: 3

initialDelaySeconds: 10

periodSeconds: 10

timeoutSeconds: 5

readinessProbe:

exec:

command:

- sh

- -c

- |

#!/usr/bin/env bash -e

filebeat test output

failureThreshold: 3

initialDelaySeconds: 10

periodSeconds: 10

timeoutSeconds: 5

# Whether this chart should self-manage its service account, role, and associated role binding.

managedServiceAccount: true

# additionals labels

labels: {}

podAnnotations: {}

# iam.amazonaws.com/role: es-cluster

# Various pod security context settings. Bear in mind that many of these have an impact on Filebeat functioning properly.

#

# - User that the container will execute as. Typically necessary to run as root (0) in order to properly collect host container logs.

# - Whether to execute the Filebeat containers as privileged containers. Typically not necessarily unless running within environments such as OpenShift.

podSecurityContext:

runAsUser: 0

privileged: false

resources:

requests:

cpu: "100m"

memory: "100Mi"

limits:

cpu: "1000m"

memory: "200Mi"

# Custom service account override that the pod will use

serviceAccount: ""

# Annotations to add to the ServiceAccount that is created if the serviceAccount value isn't set.

serviceAccountAnnotations: {}

# eks.amazonaws.com/role-arn: arn:aws:iam::111111111111:role/k8s.clustername.namespace.serviceaccount

# A list of secrets and their paths to mount inside the pod

# This is useful for mounting certificates for security other sensitive values

secretMounts: []

# - name: filebeat-certificates

# secretName: filebeat-certificates

# path: /usr/share/filebeat/certs

# How long to wait for Filebeat pods to stop gracefully

terminationGracePeriod: 30

tolerations: []

nodeSelector: {}

affinity: {}

# This is the PriorityClass settings as defined in

# https://kubernetes.io/docs/concepts/configuration/pod-priority-preemption/#priorityclass

priorityClassName: ""

updateStrategy: RollingUpdate

# Override various naming aspects of this chart

# Only edit these if you know what you're doing

nameOverride: ""

fullnameOverride: ""

==========================================================================================

Filebeat multiline

A new task came up: logs from a container running a Java application arrive line by line, so every line shows up as a separate message in Kibana, which is hard to read. To merge them into a single message, add a multiline setting to Filebeat:

multiline.pattern: '^([0-9]{4}-[0-9]{2}-[0-9]{2})'

multiline.negate: true

multiline.match: after

в общем виде:

vim filebeat/values.yaml

---
# Allows you to add any config files in /usr/share/filebeat
# such as filebeat.yml
filebeatConfig:
  filebeat.yml: |
    filebeat.inputs:
    - type: container
      paths:
        - /var/log/containers/*.log
      processors:
      - add_kubernetes_metadata:
          host: ${NODE_NAME}
          matchers:
          - logs_path:
              logs_path: "/var/log/containers/"
      multiline.pattern: '^([0-9]{4}-[0-9]{2}-[0-9]{2})'
      multiline.negate: true
      multiline.match: after

    output.logstash:
      enabled: true
      hosts: ["logstash-logstash:5044"]

Then upgrade the chart:

helm upgrade --install filebeat -n elk --values filebeat/values.yaml filebeat/

That's it: log events are now split on the date at the very start of each message.
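To see what these three settings do together, here is a minimal Python sketch of the grouping rule. It is only a simplified model, not Filebeat's implementation: real Filebeat also applies limits such as a maximum line count and a flush timeout. The sample log lines are made up for illustration.

```python
import re

# Same pattern as in the Filebeat config: a line starting with a date (YYYY-MM-DD).
PATTERN = re.compile(r"^([0-9]{4}-[0-9]{2}-[0-9]{2})")


def group_multiline(lines):
    """Simplified model of multiline.negate: true with multiline.match: after.

    A line that matches PATTERN starts a new event; every line that does
    NOT match is appended to the event that precedes it.
    """
    events = []
    for line in lines:
        if PATTERN.match(line) or not events:
            events.append(line)
        else:
            events[-1] += "\n" + line
    return events


# Hypothetical Java log lines, invented for this example.
sample = [
    "2021-03-04 10:15:00 ERROR Request failed",
    "java.lang.IllegalStateException: boom",
    "    at com.example.Service.run(Service.java:42)",
    "2021-03-04 10:15:01 INFO Recovered",
]

events = group_multiline(sample)
for event in events:
    print(repr(event))
```

The stack trace lines do not start with a date, so they are glued to the ERROR line, and Kibana shows one message instead of three.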

===========================================================================================

Addendum: enabling snapshots

Create the claim for a PV that we will then plug into the Elasticsearch template (reminder: I use nfs-provisioner).

cat pvc-snapshot.yml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: elasticsearch-snapshot-dir
  namespace: elk
  labels:
    app: elasticsearch-snapshot
spec:
  storageClassName: nfs-storageclass
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 5Gi

Apply it:

kubectl apply -f pvc-snapshot.yml

Look up the name of the created PV:

kubectl get pv -n elk | grep elk

pvc-30e262ad-770c-45ad-8e3c-28d70a6400ef 5Gi RWX Delete Bound elk/elasticsearch-snapshot-dir nfs-storageclass 21h

This is our name:

pvc-30e262ad-770c-45ad-8e3c-28d70a6400ef

Now edit the template:

vim elasticsearch/templates/statefulset.yaml

volumes:
  - name: "pvc-30e262ad-770c-45ad-8e3c-28d70a6400ef"
    persistentVolumeClaim:
      claimName: elasticsearch-snapshot-dir

And in one more place in the same file:

volumeMounts:
  - name: pvc-30e262ad-770c-45ad-8e3c-28d70a6400ef
    mountPath: "/snapshot"

For reference, the complete file looks like this:

cat elasticsearch/templates/statefulset.yaml

---
apiVersion: {{ template "elasticsearch.statefulset.apiVersion" . }}
kind: StatefulSet
metadata:
  name: {{ template "elasticsearch.uname" . }}
  labels:
    heritage: {{ .Release.Service | quote }}
    release: {{ .Release.Name | quote }}
    chart: "{{ .Chart.Name }}"
    app: "{{ template "elasticsearch.uname" . }}"
    {{- range $key, $value := .Values.labels }}
    {{ $key }}: {{ $value | quote }}
    {{- end }}
  annotations:
    esMajorVersion: "{{ include "elasticsearch.esMajorVersion" . }}"
spec:
  serviceName: {{ template "elasticsearch.uname" . }}-headless
  selector:
    matchLabels:
      app: "{{ template "elasticsearch.uname" . }}"
  replicas: {{ .Values.replicas }}
  podManagementPolicy: {{ .Values.podManagementPolicy }}
  updateStrategy:
    type: {{ .Values.updateStrategy }}
  {{- if .Values.persistence.enabled }}
  volumeClaimTemplates:
  - metadata:
      name: {{ template "elasticsearch.uname" . }}
    {{- if .Values.persistence.labels.enabled }}
      labels:
        heritage: {{ .Release.Service | quote }}
        release: {{ .Release.Name | quote }}
        chart: "{{ .Chart.Name }}"
        app: "{{ template "elasticsearch.uname" . }}"
        {{- range $key, $value := .Values.labels }}
        {{ $key }}: {{ $value | quote }}
        {{- end }}
    {{- end }}
    {{- with .Values.persistence.annotations }}
      annotations:
{{ toYaml . | indent 8 }}
    {{- end }}
    spec:
{{ toYaml .Values.volumeClaimTemplate | indent 6 }}
  {{- end }}
  template:
    metadata:
      name: "{{ template "elasticsearch.uname" . }}"
      labels:
        heritage: {{ .Release.Service | quote }}
        release: {{ .Release.Name | quote }}
        chart: "{{ .Chart.Name }}"
        app: "{{ template "elasticsearch.uname" . }}"
        {{- range $key, $value := .Values.labels }}
        {{ $key }}: {{ $value | quote }}
        {{- end }}
      annotations:
        {{- range $key, $value := .Values.podAnnotations }}
        {{ $key }}: {{ $value | quote }}
        {{- end }}
        {{/* This forces a restart if the configmap has changed */}}
        {{- if .Values.esConfig }}
        configchecksum: {{ include (print .Template.BasePath "/configmap.yaml") . | sha256sum | trunc 63 }}
        {{- end }}
    spec:
      {{- if .Values.schedulerName }}
      schedulerName: "{{ .Values.schedulerName }}"
      {{- end }}
      securityContext:
{{ toYaml .Values.podSecurityContext | indent 8 }}
      {{- if .Values.fsGroup }}
        fsGroup: {{ .Values.fsGroup }} # Deprecated value, please use .Values.podSecurityContext.fsGroup
      {{- end }}
      {{- if .Values.rbac.create }}
      serviceAccountName: "{{ template "elasticsearch.uname" . }}"
      {{- else if not (eq .Values.rbac.serviceAccountName "") }}
      serviceAccountName: {{ .Values.rbac.serviceAccountName | quote }}
      {{- end }}
      {{- with .Values.tolerations }}
      tolerations:
{{ toYaml . | indent 6 }}
      {{- end }}
      {{- with .Values.nodeSelector }}
      nodeSelector:
{{ toYaml . | indent 8 }}
      {{- end }}
      {{- if or (eq .Values.antiAffinity "hard") (eq .Values.antiAffinity "soft") .Values.nodeAffinity }}
      {{- if .Values.priorityClassName }}
      priorityClassName: {{ .Values.priorityClassName }}
      {{- end }}
      affinity:
      {{- end }}
      {{- if eq .Values.antiAffinity "hard" }}
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchExpressions:
              - key: app
                operator: In
                values:
                - "{{ template "elasticsearch.uname" .}}"
            topologyKey: {{ .Values.antiAffinityTopologyKey }}
      {{- else if eq .Values.antiAffinity "soft" }}
        podAntiAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
          - weight: 1
            podAffinityTerm:
              topologyKey: {{ .Values.antiAffinityTopologyKey }}
              labelSelector:
                matchExpressions:
                - key: app
                  operator: In
                  values:
                  - "{{ template "elasticsearch.uname" . }}"
      {{- end }}
      {{- with .Values.nodeAffinity }}
        nodeAffinity:
{{ toYaml . | indent 10 }}
      {{- end }}
      terminationGracePeriodSeconds: {{ .Values.terminationGracePeriod }}

      volumes:
        - name: "pvc-30e262ad-770c-45ad-8e3c-28d70a6400ef"
          persistentVolumeClaim:
            claimName: elasticsearch-snapshot-dir
        {{- range .Values.secretMounts }}
        - name: {{ .name }}
          secret:
            secretName: {{ .secretName }}
            {{- if .defaultMode }}
            defaultMode: {{ .defaultMode }}
            {{- end }}
        {{- end }}
        {{- if .Values.esConfig }}
        - name: esconfig
          configMap:
            name: {{ template "elasticsearch.uname" . }}-config
        {{- end }}
{{- if .Values.keystore }}
        - name: keystore
          emptyDir: {}
        {{- range .Values.keystore }}
        - name: keystore-{{ .secretName }}
          secret: {{ toYaml . | nindent 12 }}
        {{- end }}
{{ end }}
      {{- if .Values.extraVolumes }}
      # Currently some extra blocks accept strings
      # to continue with backwards compatibility this is being kept
      # whilst also allowing for yaml to be specified too.
      {{- if eq "string" (printf "%T" .Values.extraVolumes) }}
{{ tpl .Values.extraVolumes . | indent 8 }}
      {{- else }}
{{ toYaml .Values.extraVolumes | indent 8 }}
      {{- end }}
      {{- end }}
      {{- if .Values.imagePullSecrets }}
      imagePullSecrets:
{{ toYaml .Values.imagePullSecrets | indent 8 }}
      {{- end }}
      {{- if semverCompare ">1.13-0" .Capabilities.KubeVersion.GitVersion }}
      enableServiceLinks: {{ .Values.enableServiceLinks }}
      {{- end }}

      initContainers:
      {{- if .Values.sysctlInitContainer.enabled }}
      - name: configure-sysctl
        securityContext:
          runAsUser: 0
          privileged: true
        image: "{{ .Values.image }}:{{ .Values.imageTag }}"
        imagePullPolicy: "{{ .Values.imagePullPolicy }}"
        command: ["sysctl", "-w", "vm.max_map_count={{ .Values.sysctlVmMaxMapCount}}"]
        resources:
{{ toYaml .Values.initResources | indent 10 }}
      {{- end }}
{{ if .Values.keystore }}
      - name: keystore
        image: "{{ .Values.image }}:{{ .Values.imageTag }}"
        imagePullPolicy: "{{ .Values.imagePullPolicy }}"
        command:
        - sh
        - -c
        - |
          #!/usr/bin/env bash
          set -euo pipefail
          elasticsearch-keystore create
          for i in /tmp/keystoreSecrets/*/*; do
            key=$(basename $i)
            echo "Adding file $i to keystore key $key"
            elasticsearch-keystore add-file "$key" "$i"
          done
          # Add the bootstrap password since otherwise the Elasticsearch entrypoint tries to do this on startup
          if [ ! -z ${ELASTIC_PASSWORD+x} ]; then
            echo 'Adding env $ELASTIC_PASSWORD to keystore as key bootstrap.password'
            echo "$ELASTIC_PASSWORD" | elasticsearch-keystore add -x bootstrap.password
          fi
          cp -a /usr/share/elasticsearch/config/elasticsearch.keystore /tmp/keystore/
        env: {{ toYaml .Values.extraEnvs | nindent 10 }}
        envFrom: {{ toYaml .Values.envFrom | nindent 10 }}
        resources: {{ toYaml .Values.initResources | nindent 10 }}
        volumeMounts:
          - name: keystore
            mountPath: /tmp/keystore
          {{- range .Values.keystore }}
          - name: keystore-{{ .secretName }}
            mountPath: /tmp/keystoreSecrets/{{ .secretName }}
          {{- end }}
{{ end }}
      {{- if .Values.extraInitContainers }}
      # Currently some extra blocks accept strings
      # to continue with backwards compatibility this is being kept
      # whilst also allowing for yaml to be specified too.
      {{- if eq "string" (printf "%T" .Values.extraInitContainers) }}
{{ tpl .Values.extraInitContainers . | indent 6 }}
      {{- else }}
{{ toYaml .Values.extraInitContainers | indent 6 }}
      {{- end }}
      {{- end }}

      containers:
      - name: "{{ template "elasticsearch.name" . }}"
        securityContext:
{{ toYaml .Values.securityContext | indent 10 }}
        image: "{{ .Values.image }}:{{ .Values.imageTag }}"
        imagePullPolicy: "{{ .Values.imagePullPolicy }}"
        readinessProbe:
          exec:
            command:
              - sh
              - -c
              - |
                #!/usr/bin/env bash -e
                # If the node is starting up wait for the cluster to be ready (request params: "{{ .Values.clusterHealthCheckParams }}" )
                # Once it has started only check that the node itself is responding
                START_FILE=/tmp/.es_start_file
                # Disable nss cache to avoid filling dentry cache when calling curl
                # This is required with Elasticsearch Docker using nss < 3.52
                export NSS_SDB_USE_CACHE=no
                http () {
                  local path="${1}"
                  local args="${2}"
                  set -- -XGET -s
                  if [ "$args" != "" ]; then
                    set -- "$@" $args
                  fi
                  if [ -n "${ELASTIC_USERNAME}" ] && [ -n "${ELASTIC_PASSWORD}" ]; then
                    set -- "$@" -u "${ELASTIC_USERNAME}:${ELASTIC_PASSWORD}"
                  fi
                  curl --output /dev/null -k "$@" "{{ .Values.protocol }}://127.0.0.1:{{ .Values.httpPort }}${path}"
                }
                if [ -f "${START_FILE}" ]; then
                  echo 'Elasticsearch is already running, lets check the node is healthy'
                  HTTP_CODE=$(http "/" "-w %{http_code}")
                  RC=$?
                  if [[ ${RC} -ne 0 ]]; then
                    echo "curl --output /dev/null -k -XGET -s -w '%{http_code}' \${BASIC_AUTH} {{ .Values.protocol }}://127.0.0.1:{{ .Values.httpPort }}/ failed with RC ${RC}"
                    exit ${RC}
                  fi
                  # ready if HTTP code 200, 503 is tolerable if ES version is 6.x
                  if [[ ${HTTP_CODE} == "200" ]]; then
                    exit 0
                  elif [[ ${HTTP_CODE} == "503" && "{{ include "elasticsearch.esMajorVersion" . }}" == "6" ]]; then
                    exit 0
                  else
                    echo "curl --output /dev/null -k -XGET -s -w '%{http_code}' \${BASIC_AUTH} {{ .Values.protocol }}://127.0.0.1:{{ .Values.httpPort }}/ failed with HTTP code ${HTTP_CODE}"
                    exit 1
                  fi
                else
                  echo 'Waiting for elasticsearch cluster to become ready (request params: "{{ .Values.clusterHealthCheckParams }}" )'
                  if http "/_cluster/health?{{ .Values.clusterHealthCheckParams }}" "--fail" ; then
                    touch ${START_FILE}
                    exit 0
                  else
                    echo 'Cluster is not yet ready (request params: "{{ .Values.clusterHealthCheckParams }}" )'
                    exit 1
                  fi
                fi
{{ toYaml .Values.readinessProbe | indent 10 }}
        ports:
        - name: http
          containerPort: {{ .Values.httpPort }}
        - name: transport
          containerPort: {{ .Values.transportPort }}
        resources:
{{ toYaml .Values.resources | indent 10 }}
        env:
          - name: node.name
            valueFrom:
              fieldRef:
                fieldPath: metadata.name
          {{- if eq .Values.roles.master "true" }}
          {{- if ge (int (include "elasticsearch.esMajorVersion" .)) 7 }}
          - name: cluster.initial_master_nodes
            value: "{{ template "elasticsearch.endpoints" . }}"
          {{- else }}
          - name: discovery.zen.minimum_master_nodes
            value: "{{ .Values.minimumMasterNodes }}"
          {{- end }}
          {{- end }}
          {{- if lt (int (include "elasticsearch.esMajorVersion" .)) 7 }}
          - name: discovery.zen.ping.unicast.hosts
            value: "{{ template "elasticsearch.masterService" . }}-headless"
          {{- else }}
          - name: discovery.seed_hosts
            value: "{{ template "elasticsearch.masterService" . }}-headless"
          {{- end }}
          - name: cluster.name
            value: "{{ .Values.clusterName }}"
          - name: network.host
            value: "{{ .Values.networkHost }}"
          - name: ES_JAVA_OPTS
            value: "{{ .Values.esJavaOpts }}"
          {{- range $role, $enabled := .Values.roles }}
          - name: node.{{ $role }}
            value: "{{ $enabled }}"
          {{- end }}
{{- if .Values.extraEnvs }}
{{ toYaml .Values.extraEnvs | indent 10 }}
{{- end }}
{{- if .Values.envFrom }}
        envFrom:
{{ toYaml .Values.envFrom | indent 10 }}
{{- end }}
        volumeMounts:
          - name: pvc-30e262ad-770c-45ad-8e3c-28d70a6400ef
            mountPath: "/snapshot"
          {{- if .Values.persistence.enabled }}
          - name: "{{ template "elasticsearch.uname" . }}"
            mountPath: /usr/share/elasticsearch/data
          {{- end }}
{{ if .Values.keystore }}
          - name: keystore
            mountPath: /usr/share/elasticsearch/config/elasticsearch.keystore
            subPath: elasticsearch.keystore
{{ end }}
          {{- range .Values.secretMounts }}
          - name: {{ .name }}
            mountPath: {{ .path }}
            {{- if .subPath }}
            subPath: {{ .subPath }}
            {{- end }}
          {{- end }}
          {{- range $path, $config := .Values.esConfig }}
          - name: esconfig
            mountPath: /usr/share/elasticsearch/config/{{ $path }}
            subPath: {{ $path }}
          {{- end -}}
        {{- if .Values.extraVolumeMounts }}
        # Currently some extra blocks accept strings
        # to continue with backwards compatibility this is being kept
        # whilst also allowing for yaml to be specified too.
        {{- if eq "string" (printf "%T" .Values.extraVolumeMounts) }}
{{ tpl .Values.extraVolumeMounts . | indent 10 }}
        {{- else }}
{{ toYaml .Values.extraVolumeMounts | indent 10 }}
        {{- end }}
        {{- end }}

      {{- if .Values.masterTerminationFix }}
      {{- if eq .Values.roles.master "true" }}
      # This sidecar will prevent slow master re-election
      # https://github.com/elastic/helm-charts/issues/63
      - name: elasticsearch-master-graceful-termination-handler
        image: "{{ .Values.image }}:{{ .Values.imageTag }}"
        imagePullPolicy: "{{ .Values.imagePullPolicy }}"
        command:
        - "sh"
        - -c
        - |
          #!/usr/bin/env bash
          set -eo pipefail
          http () {
            local path="${1}"
            if [ -n "${ELASTIC_USERNAME}" ] && [ -n "${ELASTIC_PASSWORD}" ]; then
              BASIC_AUTH="-u ${ELASTIC_USERNAME}:${ELASTIC_PASSWORD}"
            else
              BASIC_AUTH=''
            fi
            curl -XGET -s -k --fail ${BASIC_AUTH} {{ .Values.protocol }}://{{ template "elasticsearch.masterService" . }}:{{ .Values.httpPort }}${path}
          }
          cleanup () {
            while true ; do
              local master="$(http "/_cat/master?h=node" || echo "")"
              if [[ $master == "{{ template "elasticsearch.masterService" . }}"* && $master != "${NODE_NAME}" ]]; then
                echo "This node is not master."
                break
              fi
              echo "This node is still master, waiting gracefully for it to step down"
              sleep 1
            done
            exit 0
          }
          trap cleanup SIGTERM
          sleep infinity &
          wait $!
        resources:
{{ toYaml .Values.sidecarResources | indent 10 }}
        env:
          - name: NODE_NAME
            valueFrom:
              fieldRef:
                fieldPath: metadata.name
        {{- if .Values.extraEnvs }}
{{ toYaml .Values.extraEnvs | indent 10 }}
        {{- end }}
        {{- if .Values.envFrom }}
        envFrom:
{{ toYaml .Values.envFrom | indent 10 }}
        {{- end }}
      {{- end }}
      {{- end }}
{{- if .Values.lifecycle }}
        lifecycle:
{{ toYaml .Values.lifecycle | indent 10 }}
{{- end }}
      {{- if .Values.extraContainers }}
      # Currently some extra blocks accept strings
      # to continue with backwards compatibility this is being kept
      # whilst also allowing for yaml to be specified too.
      {{- if eq "string" (printf "%T" .Values.extraContainers) }}
{{ tpl .Values.extraContainers . | indent 6 }}
      {{- else }}
{{ toYaml .Values.extraContainers | indent 6 }}
      {{- end }}
      {{- end }}

===========================================================================================

Start the installation:

[root@prod-vsrv-kubemaster1 helm-charts]# helm install elasticsearch -n elk --values elasticsearch/values.yaml elasticsearch/

[root@prod-vsrv-kubemaster1 helm-charts]# helm install kibana -n elk --values kibana/values.yaml kibana/

Wait until Kibana starts, then open the domain specified for the ingress in the Kibana values.

Log in with the username and password from the secret created at the very beginning (as a reminder: elastic / elastic).

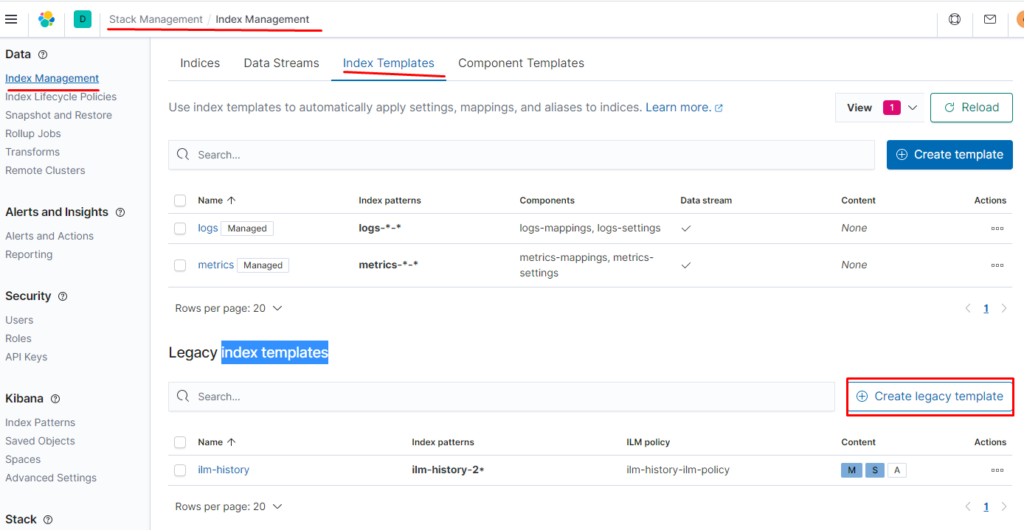

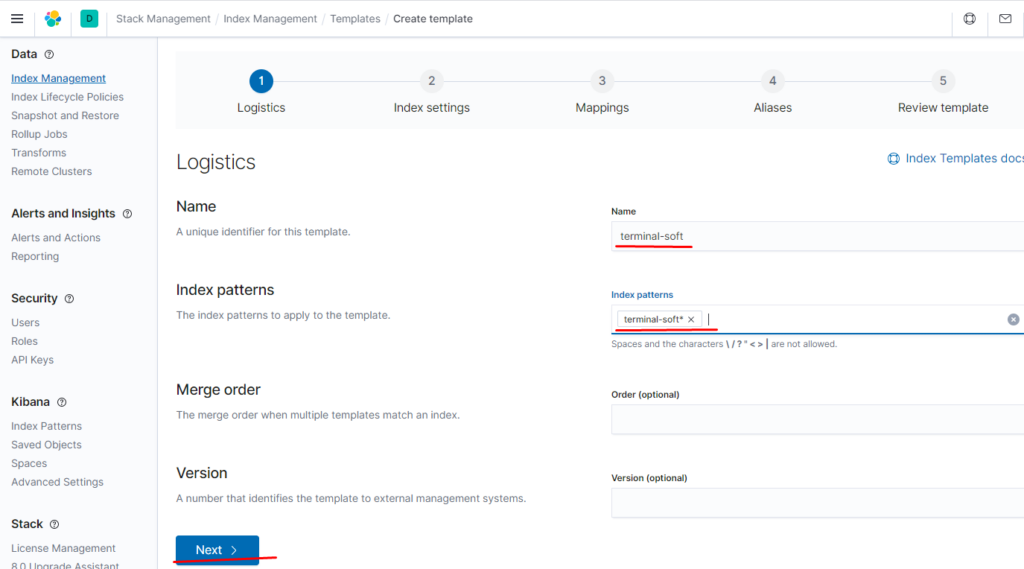

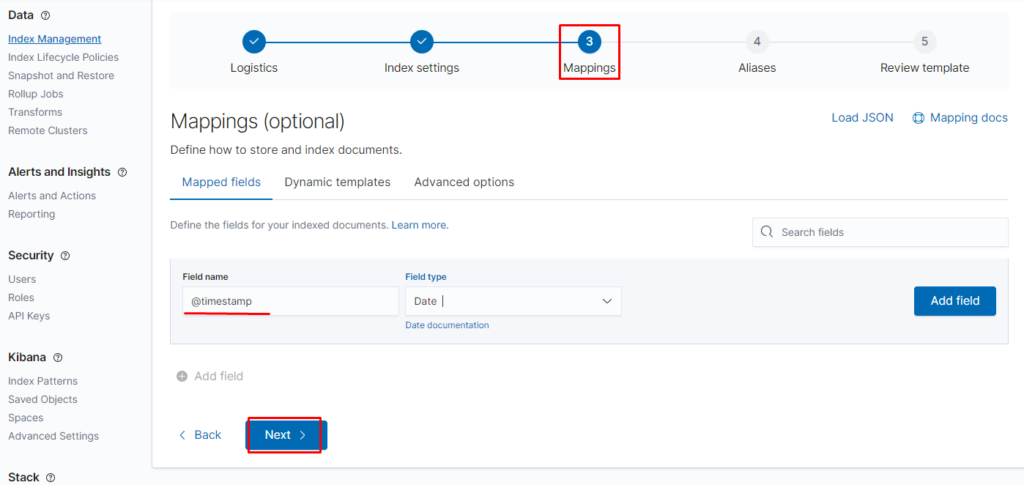

Go to:

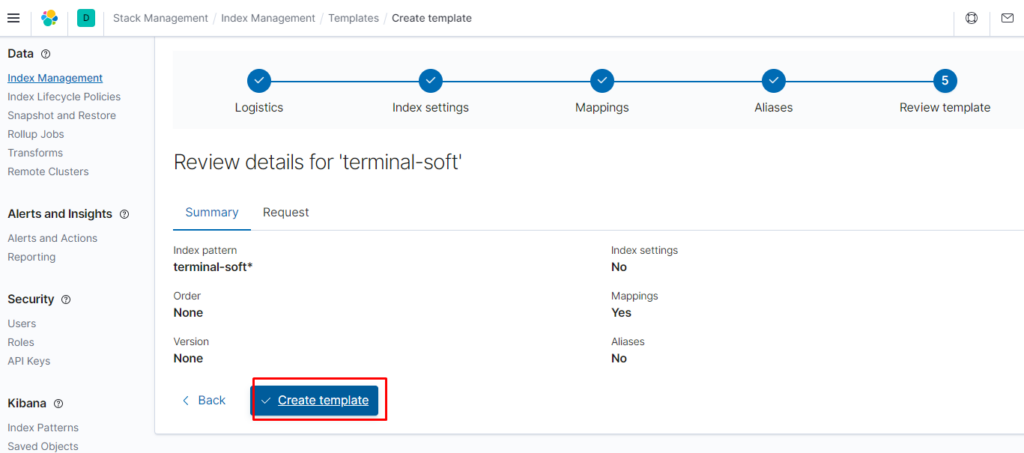

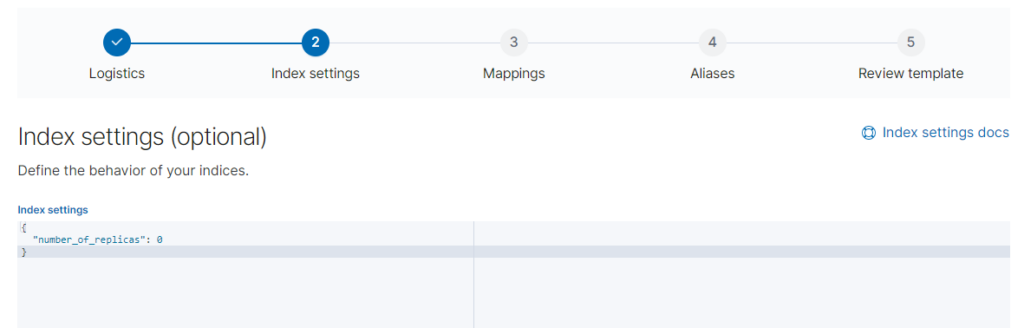

Stack Management > Index Management > Index Templates

On the second step click Next; on the third step enter @timestamp and select the date type.

Then click Next and continue:

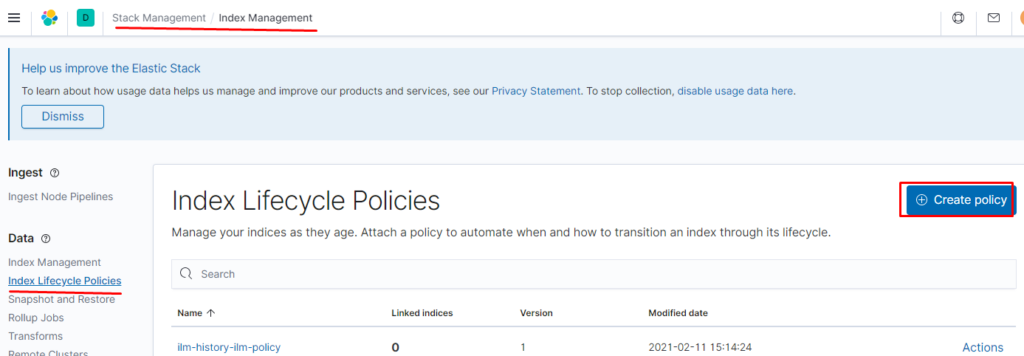

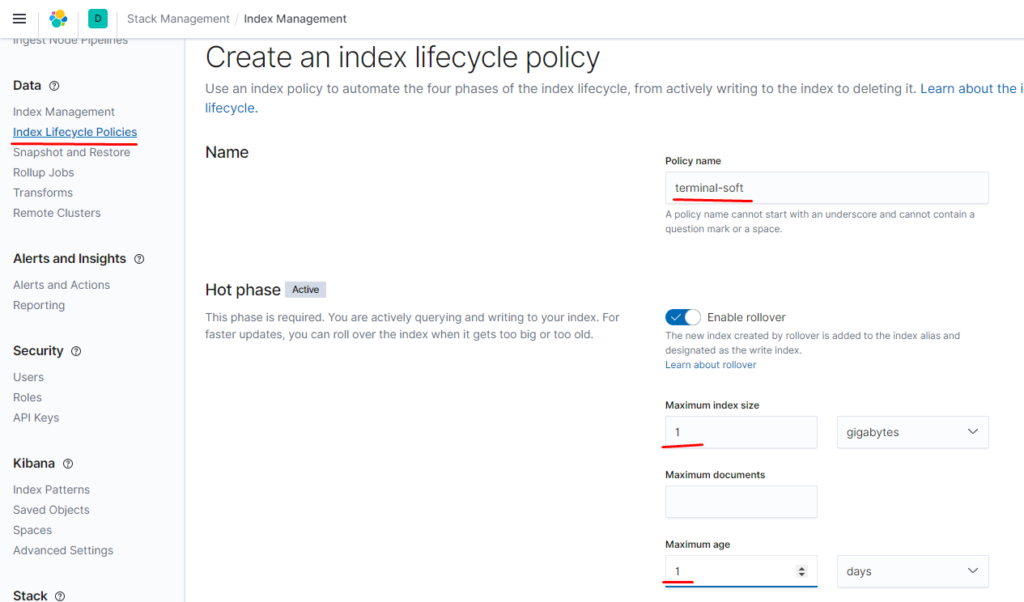

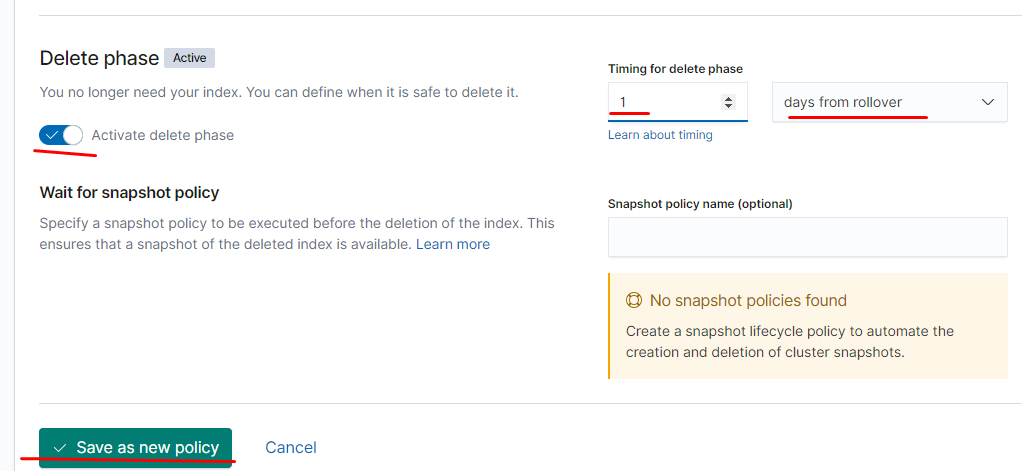

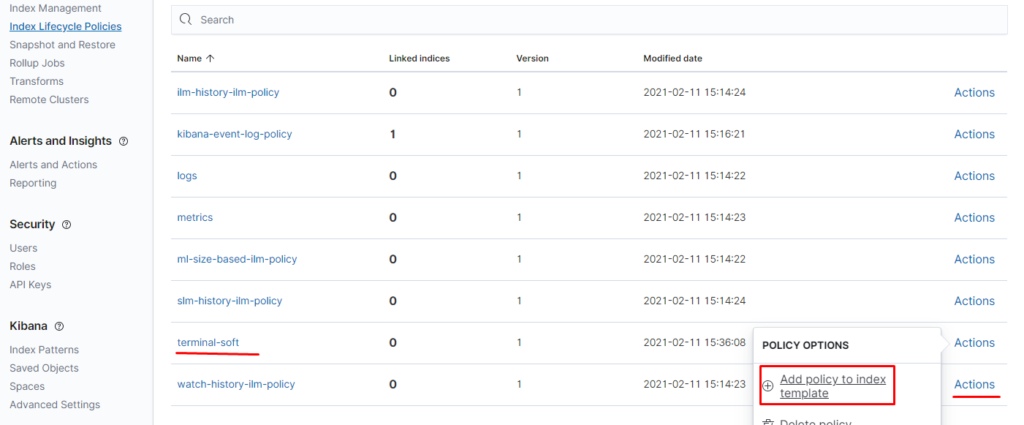

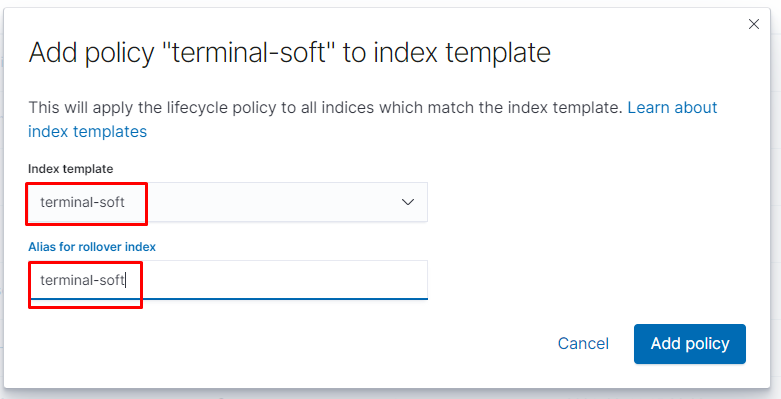

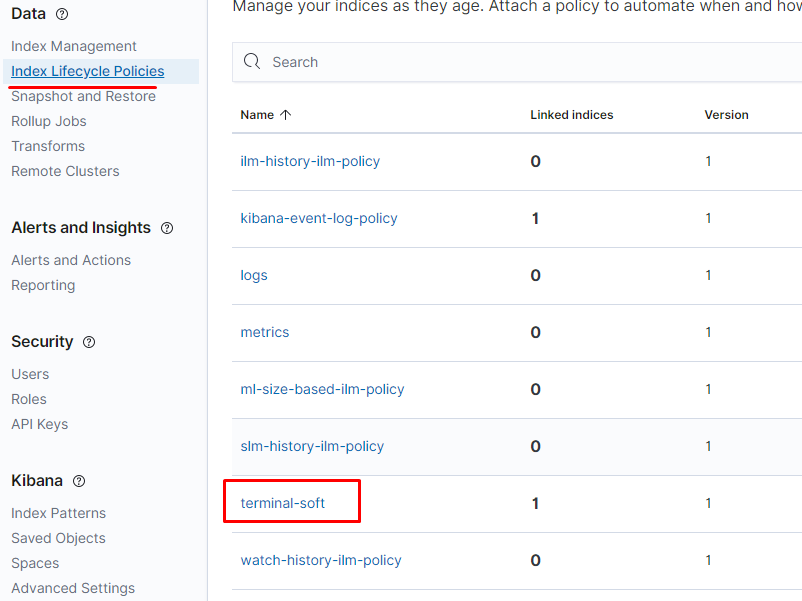

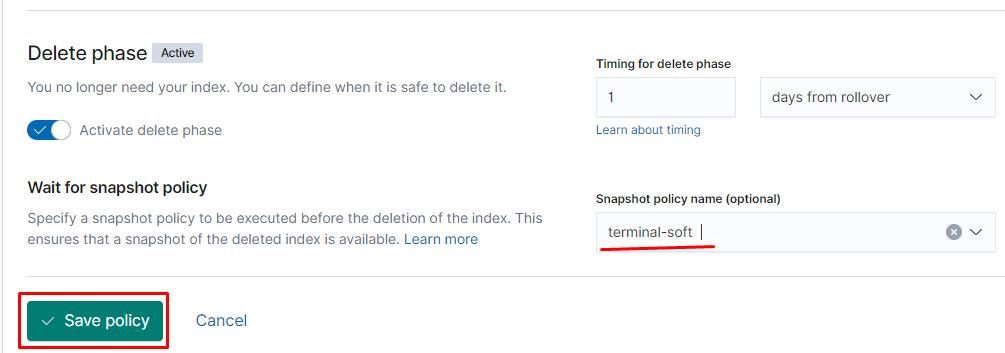

Now create the policy for rollover and deletion.
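The same kind of policy can also be created through the Elasticsearch ILM API instead of the Kibana wizard. Below is a rough sketch of the request body. The policy name `terminal-soft-policy` and the thresholds (`1d`, `50gb`, `30d`) are my assumptions for illustration; use the values you picked in the screenshots.

```python
import json


def build_ilm_policy(rollover_max_age, rollover_max_size, delete_after):
    """Build the body for PUT _ilm/policy/<name>.

    hot phase: roll the write index over once it is old or large enough.
    delete phase: remove the index delete_after its rollover.
    """
    return {
        "policy": {
            "phases": {
                "hot": {
                    "actions": {
                        "rollover": {
                            "max_age": rollover_max_age,
                            "max_size": rollover_max_size,
                        }
                    }
                },
                "delete": {
                    "min_age": delete_after,
                    "actions": {"delete": {}},
                },
            }
        }
    }


# Hypothetical thresholds, not taken from the article.
policy = build_ilm_policy("1d", "50gb", "30d")

# Hypothetical policy name; send the JSON below as the request body.
print("PUT _ilm/policy/terminal-soft-policy")
print(json.dumps(policy, indent=2))
```

The printed request can be pasted into Kibana Dev Tools or sent with curl using the elastic user.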

Start Logstash and Filebeat, then configure snapshots:

[root@prod-vsrv-kubemaster1 helm-charts]# helm install logstash -n elk --values logstash/values.yaml logstash/

[root@prod-vsrv-kubemaster1 helm-charts]# helm install filebeat -n elk --values filebeat/values.yaml filebeat/

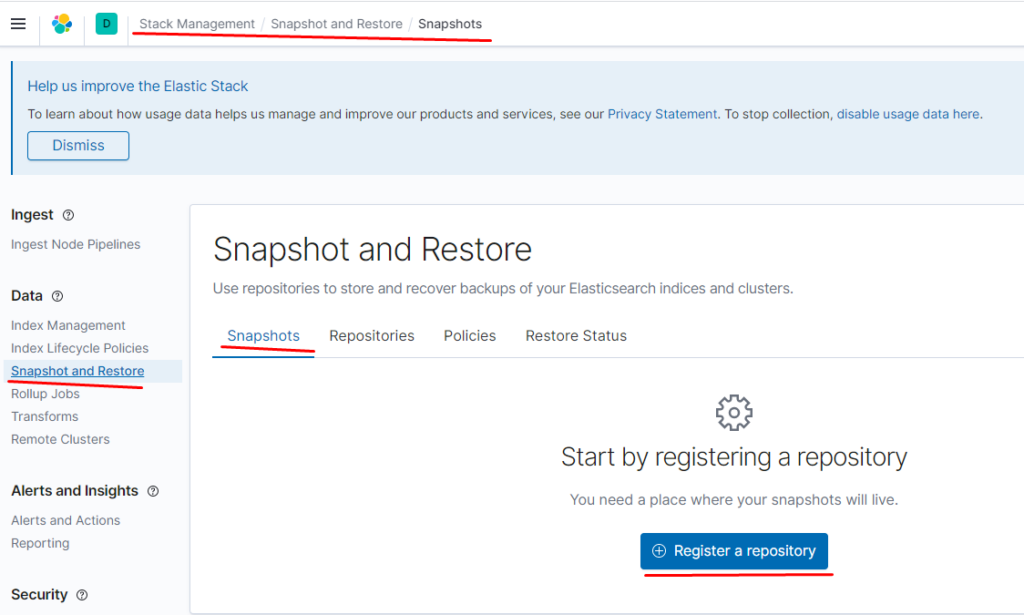

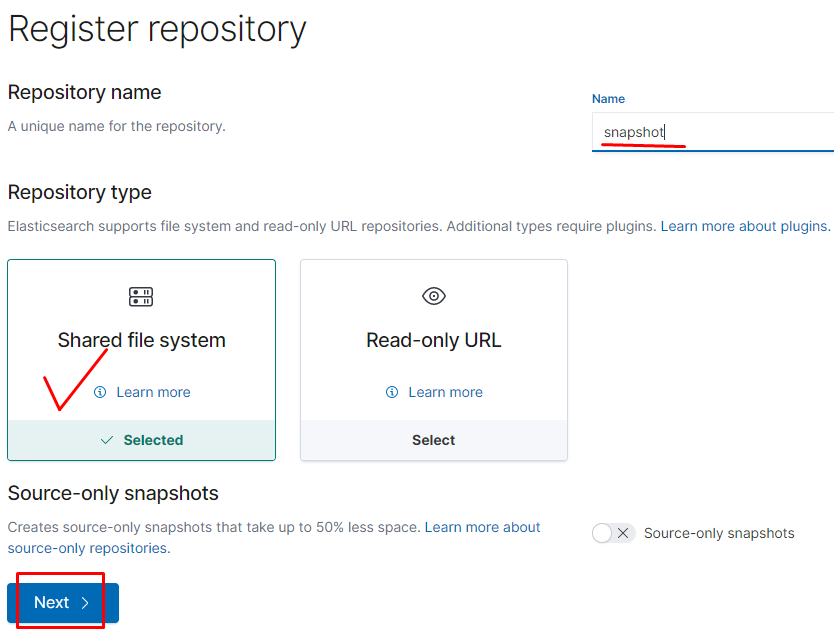

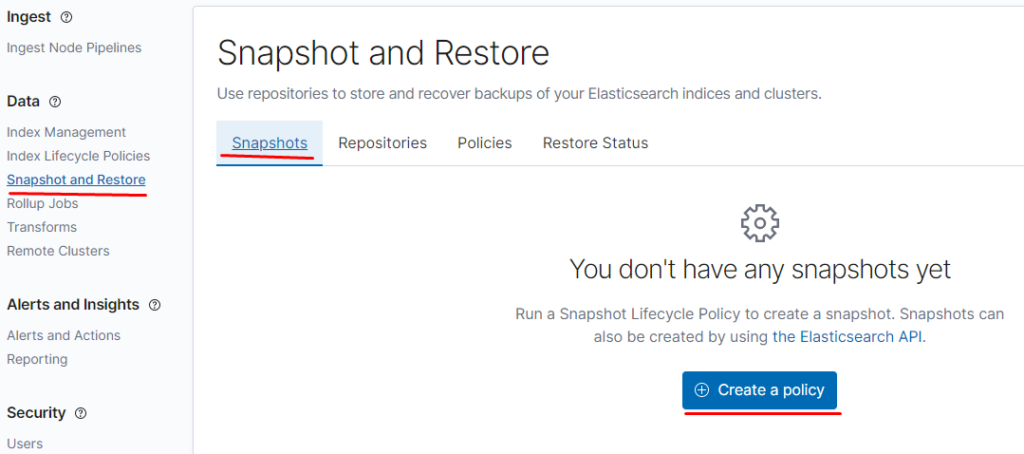

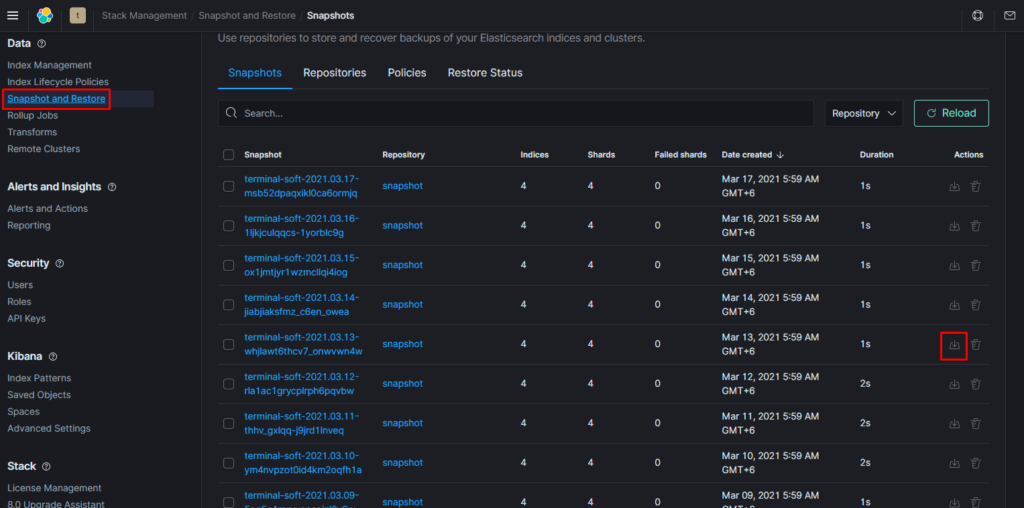

теперь создаём репозиторий для снапшотов и их политику

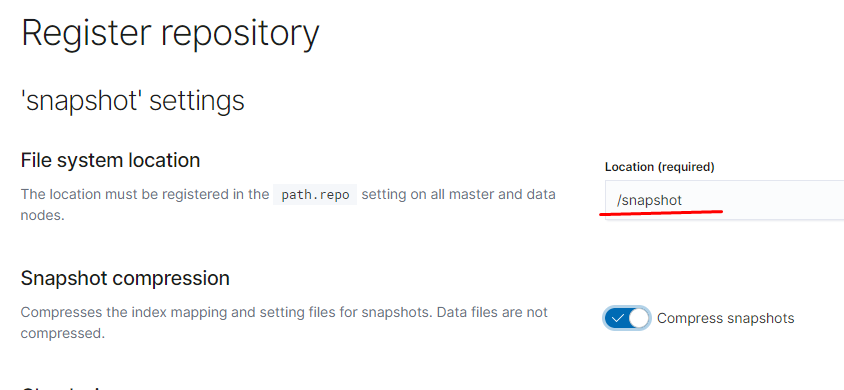

тут указываем нашу директорию /snapshot она подключалась через отдельный вольюм

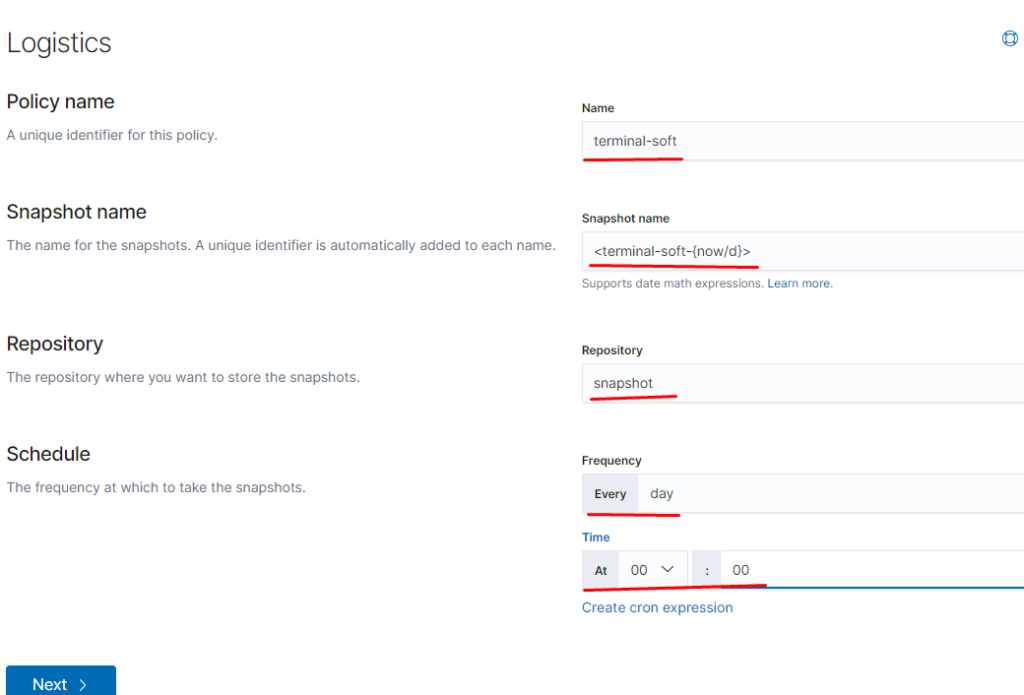

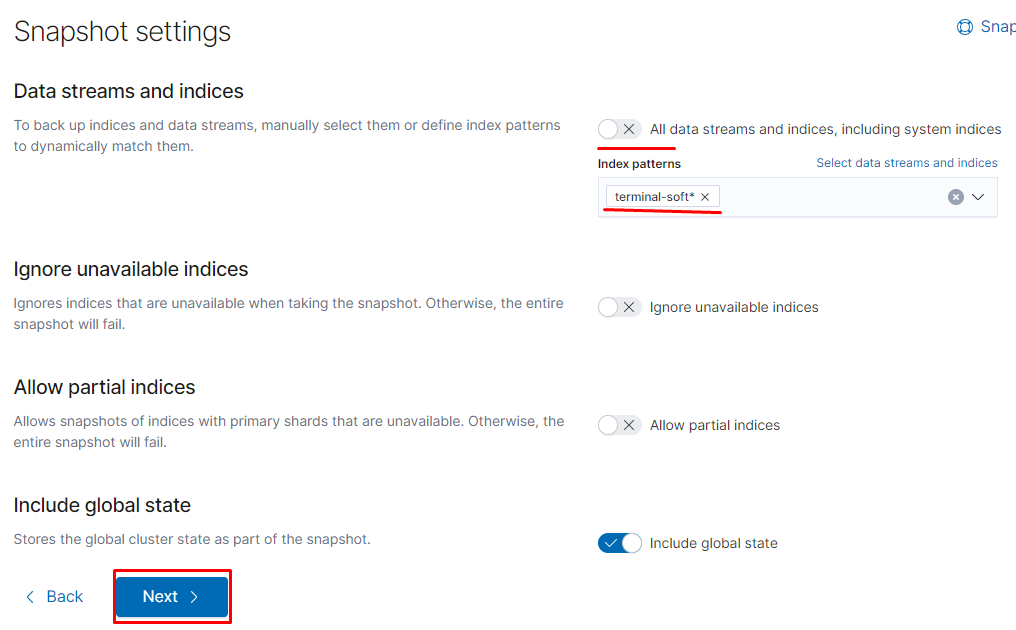

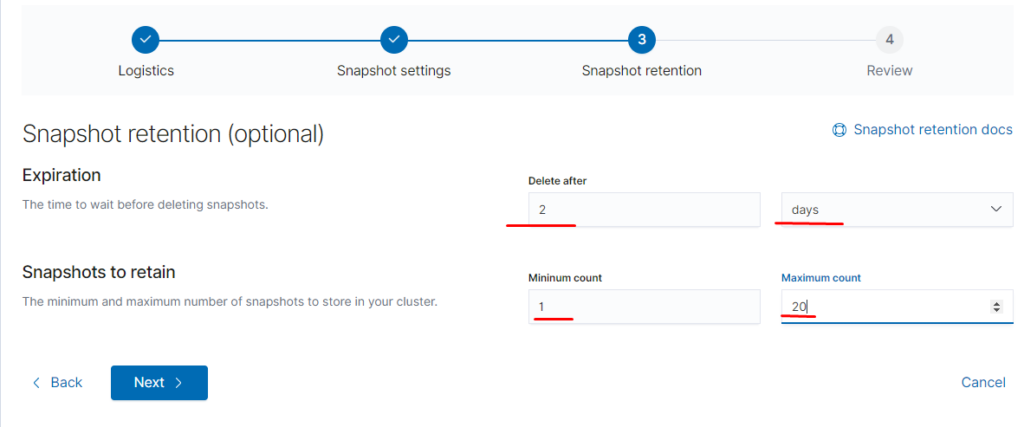

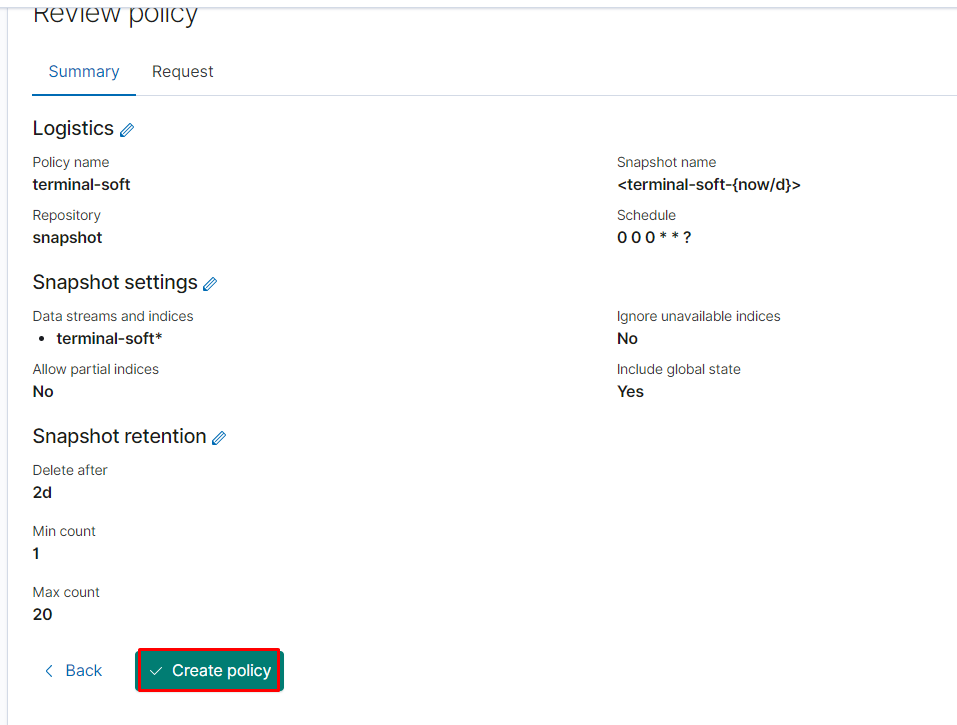

Then enter:

name: terminal-soft

snapshot name: <terminal-soft-{now/d}> (the angle brackets are required)
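For those who prefer the API to the UI, here is a sketch of the two request bodies behind these screens: registering a shared file system repository and creating the snapshot lifecycle (SLM) policy. The repository name `snapshot-repo`, the cron schedule and the index pattern are assumptions of mine, not values from the article. It also assumes that `path.repo` in the Elasticsearch config already allows `/snapshot`. The small helper shows what the `{now/d}` date math resolves to; SLM additionally appends a unique suffix to every snapshot name.

```python
import json
from datetime import date


def build_fs_repository(location):
    """Body for PUT _snapshot/<repository>: a shared file system repository."""
    return {"type": "fs", "settings": {"location": location}}


def build_slm_policy(snapshot_name, repository, schedule, indices):
    """Body for PUT _slm/policy/<policy-id>."""
    return {
        "schedule": schedule,
        "name": snapshot_name,
        "repository": repository,
        "config": {"indices": indices},
    }


def resolve_day_name(prefix, day):
    """Approximate what <prefix-{now/d}> expands to (default format yyyy.MM.dd)."""
    return f"{prefix}-{day:%Y.%m.%d}"


repo = build_fs_repository("/snapshot")
slm = build_slm_policy(
    snapshot_name="<terminal-soft-{now/d}>",  # angle brackets enable date math
    repository="snapshot-repo",               # hypothetical repository name
    schedule="0 30 1 * * ?",                  # hypothetical: daily at 01:30
    indices=["terminal-soft-*"],              # hypothetical index pattern
)

print("PUT _snapshot/snapshot-repo")
print(json.dumps(repo, indent=2))
print("PUT _slm/policy/terminal-soft")
print(json.dumps(slm, indent=2))
print(resolve_day_name("terminal-soft", date(2021, 3, 4)))
```

Without the angle brackets the name is taken literally and the date is not substituted, which is why they are required.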

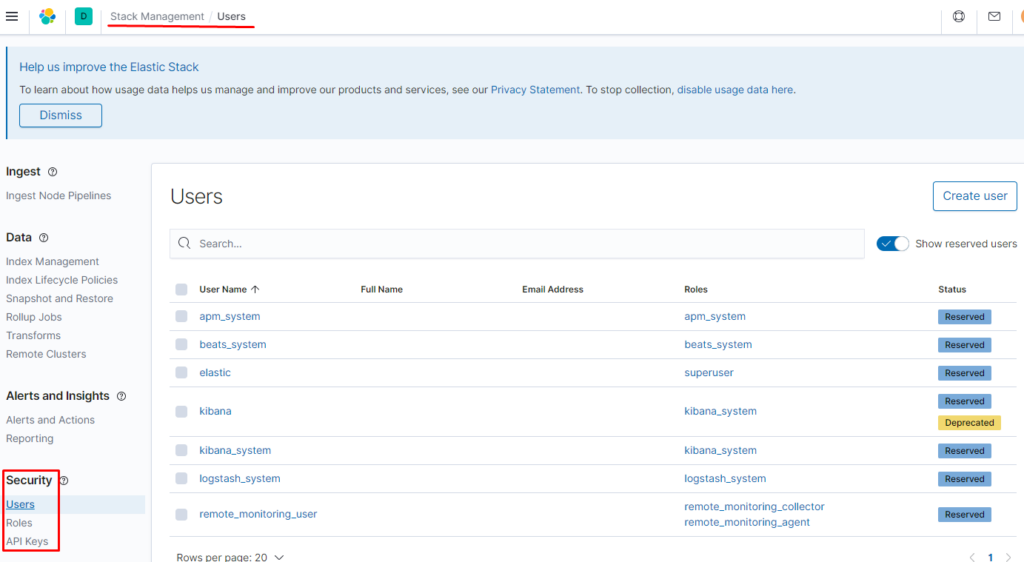

Roles and users can be configured here:

======================================================================================================================================================

Running Elasticsearch as a single instance

vim elasticsearch/values.yaml

replicas: 1

minimumMasterNodes: 1

[root@prod-vsrv-kubemaster1 helm-charts-7.9.4.elasticsearch-1-pod]# helm install elasticsearch -n elk --values elasticsearch/values.yaml elasticsearch/

[root@prod-vsrv-kubemaster1 helm-charts-7.9.4.elasticsearch-1-pod]# helm install kibana -n elk --values kibana/values.yaml kibana/

Create the same template in Kibana as before,

but set the parameter "number_of_replicas": "0".
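The reason for zero replicas: with a single node there is nowhere to place a replica shard, so the index would stay in yellow status. A minimal sketch of a template body with that setting follows. The template name and the `terminal-soft-*` pattern are my assumptions, and the real template from the wizard contains more settings than shown here.

```python
import json


def build_index_template(pattern, replicas):
    """Body for PUT _index_template/<name> with only the replica setting."""
    return {
        "index_patterns": [pattern],
        "template": {
            "settings": {"number_of_replicas": str(replicas)},
        },
    }


# Hypothetical pattern; 0 replicas because the cluster has a single node.
template_body = build_index_template("terminal-soft-*", 0)

print("PUT _index_template/terminal-soft")
print(json.dumps(template_body, indent=2))
```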

Then start Logstash and Filebeat:

[root@prod-vsrv-kubemaster1 helm-charts-7.9.4.elasticsearch-1-pod]# helm install logstash -n elk --values logstash/values.yaml logstash/

[root@prod-vsrv-kubemaster1 helm-charts-7.9.4.elasticsearch-1-pod]# helm install filebeat -n elk --values filebeat/values.yaml filebeat/

After that, configure snapshots.

====================================================================================================

Configuring user access to indices.

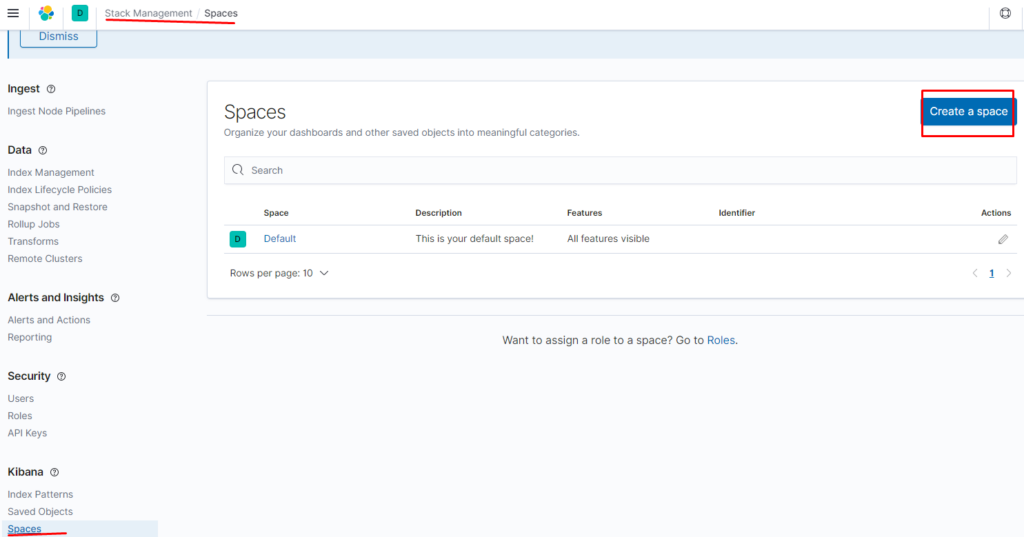

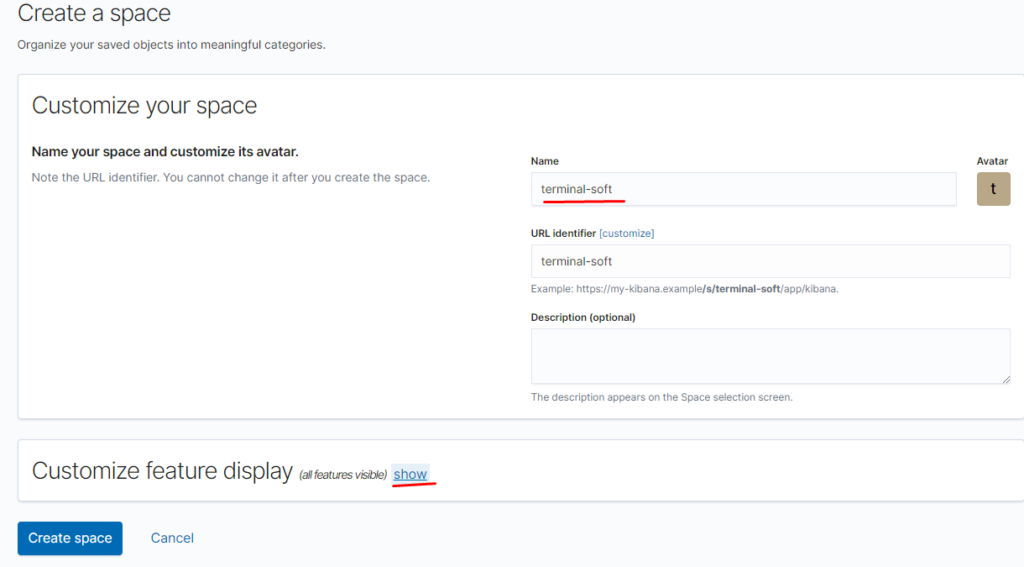

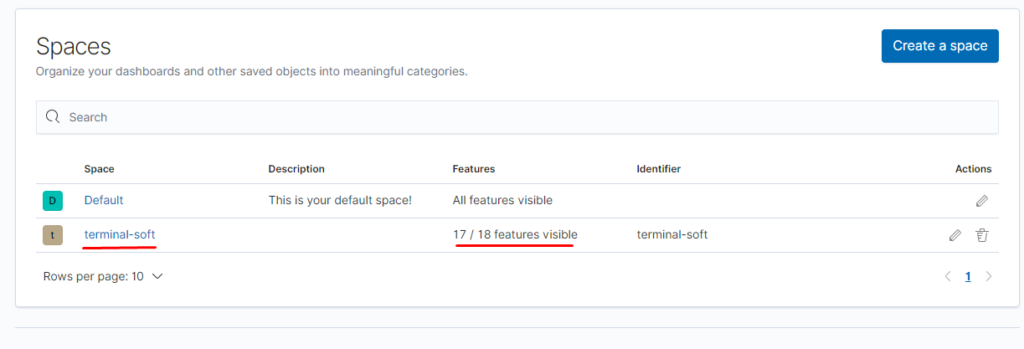

Создаём пространство:

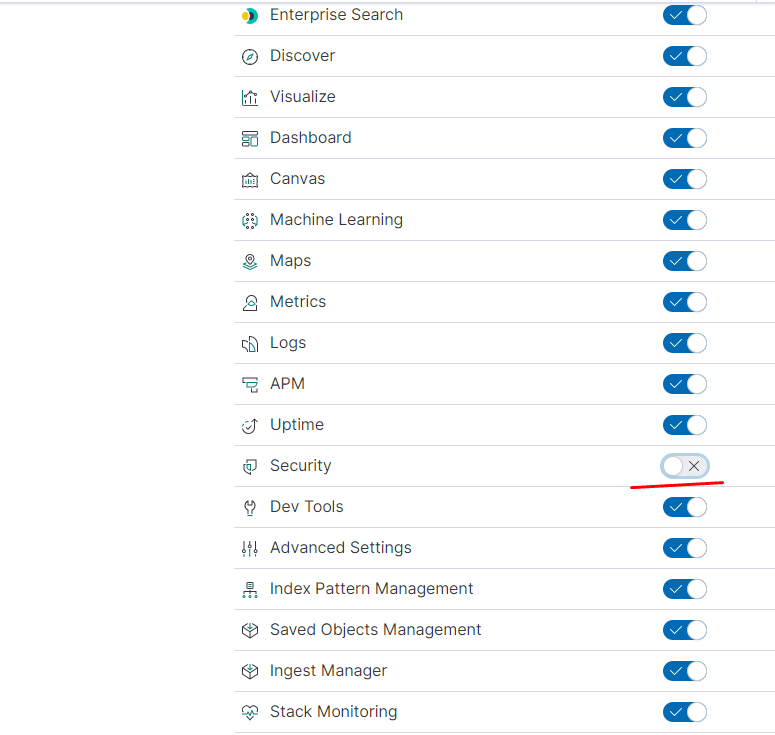

указываем — что должно отображаться в пространстве:

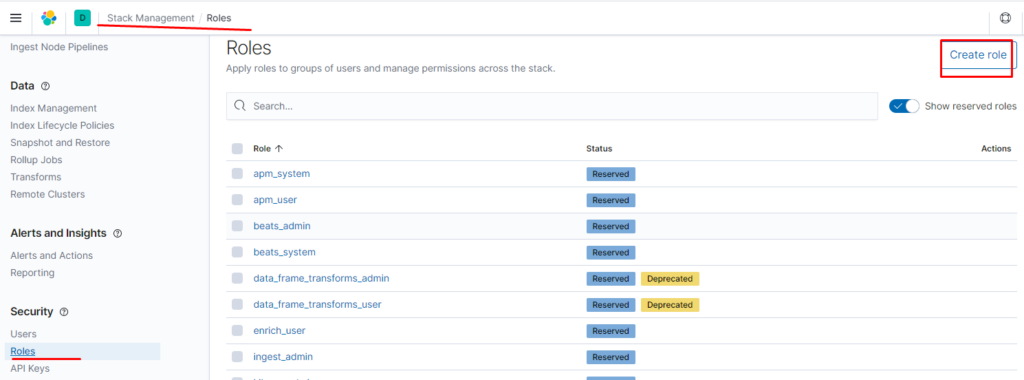

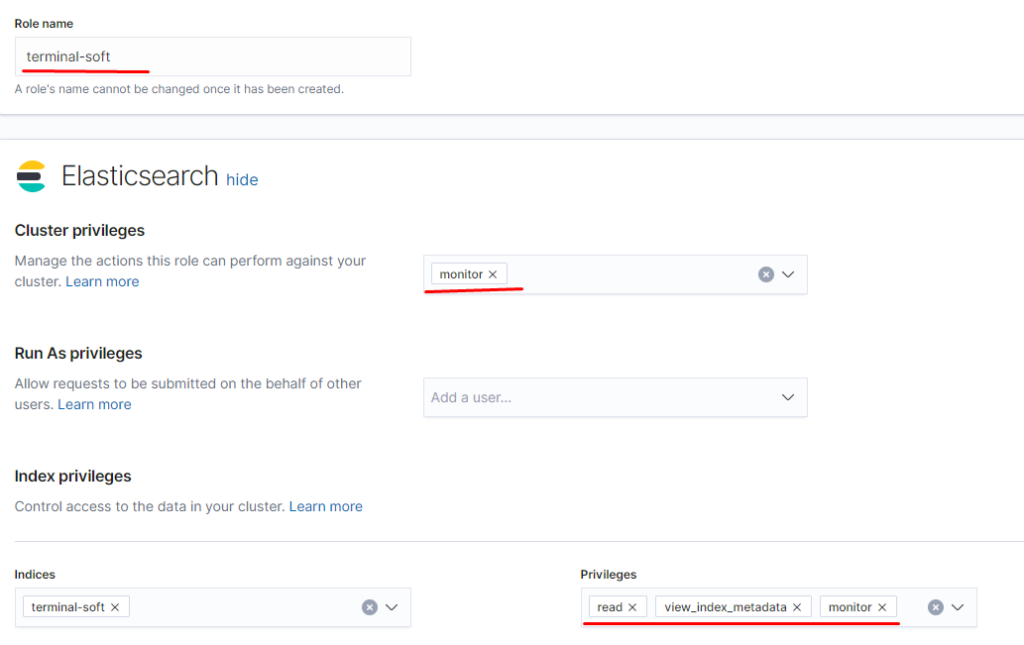

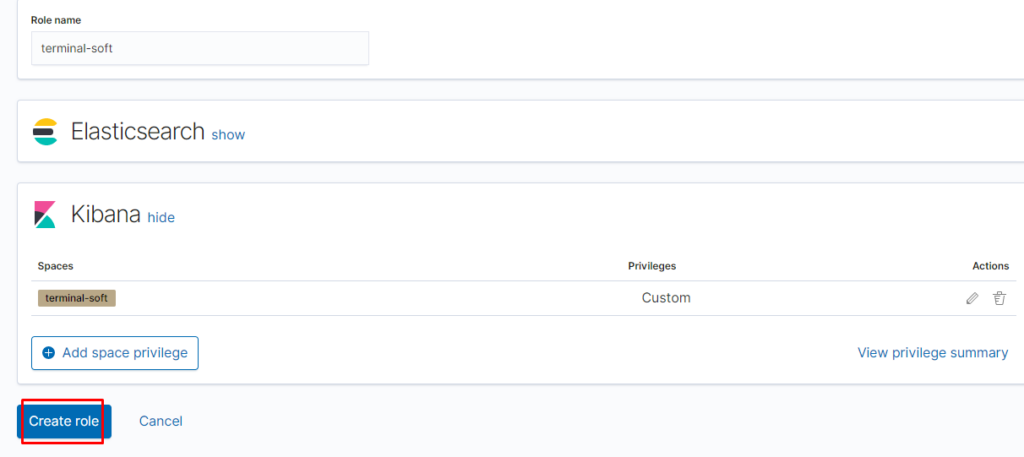

Create a role for our index:

Specify privileges both for the cluster and for the terminal-soft index itself.
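The Elasticsearch part of such a role can also be described as a request to the security API. This is only a sketch: the role name `terminal-soft-reader` and the specific privileges (`monitor`, `read`, `view_index_metadata`) are my assumptions of a read-only setup, not necessarily what is selected in the screenshot. Kibana space privileges are not covered by this body and are still set as shown in the UI.

```python
import json


def build_role(index_pattern, cluster_privileges, index_privileges):
    """Body for PUT _security/role/<name>: cluster plus per-index privileges."""
    return {
        "cluster": cluster_privileges,
        "indices": [
            {"names": [index_pattern], "privileges": index_privileges},
        ],
    }


# Hypothetical read-only role for the terminal-soft indices.
role = build_role(
    index_pattern="terminal-soft*",
    cluster_privileges=["monitor"],
    index_privileges=["read", "view_index_metadata"],
)

print("PUT _security/role/terminal-soft-reader")
print(json.dumps(role, indent=2))
```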

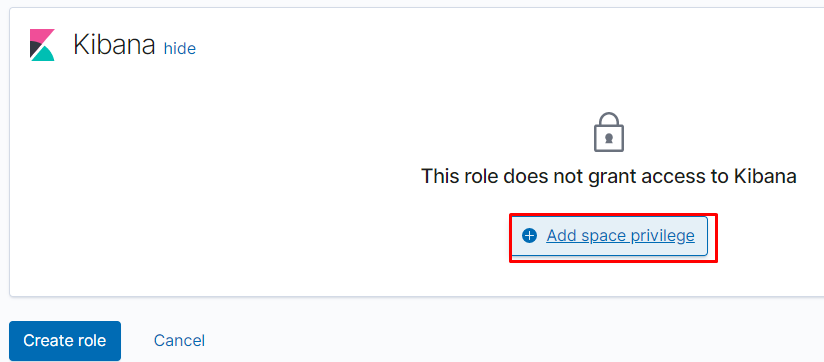

добавляем привилегии для пространства:

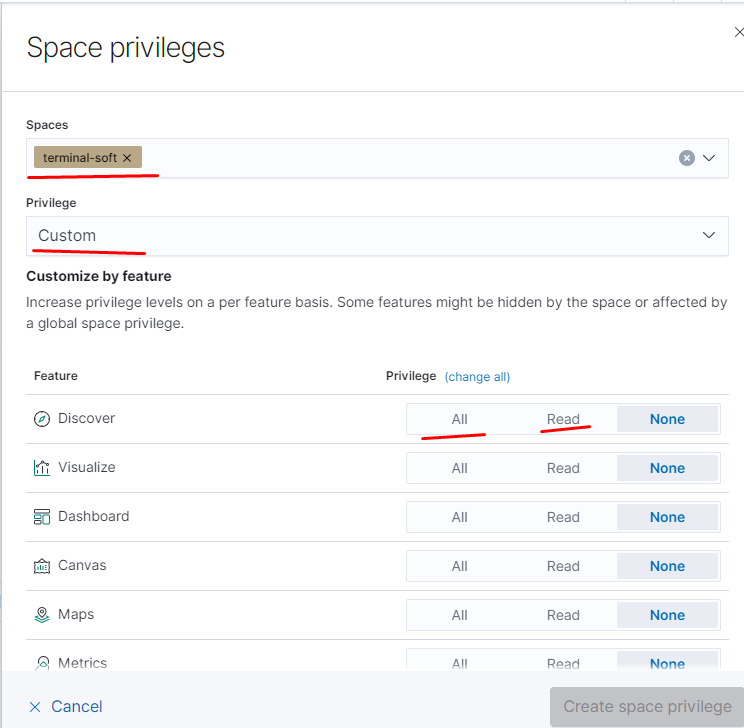

настраиваем доступы для пространства, — чтение/полный доступ/отключить

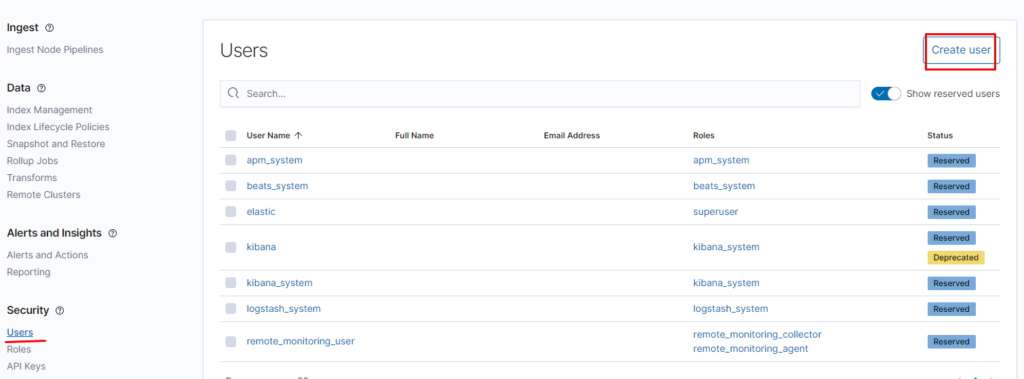

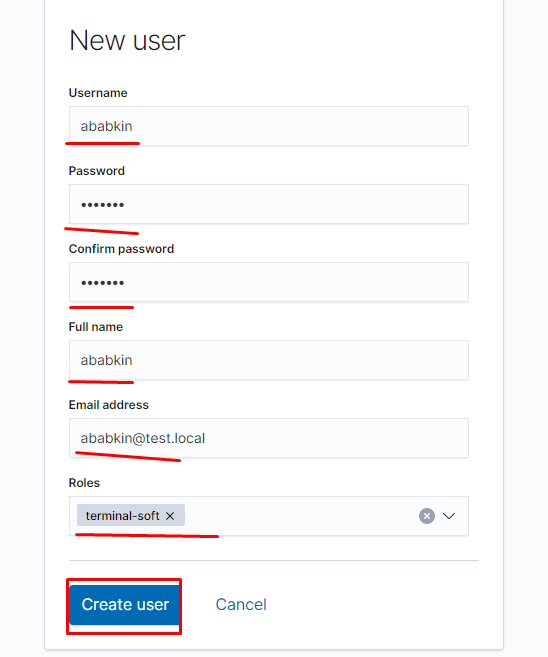

Создаём пользователя:

задаём пароль и созданную нами ранее роль:

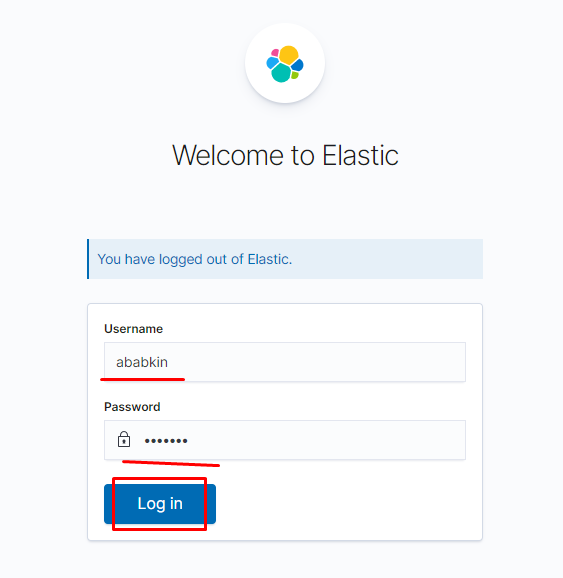

Логинимся под нашим новым пользователем

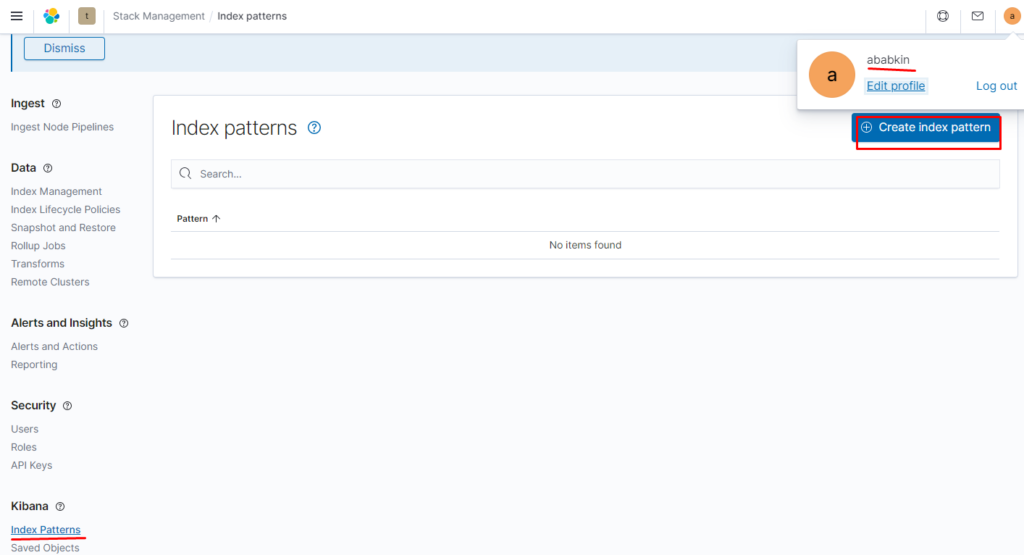

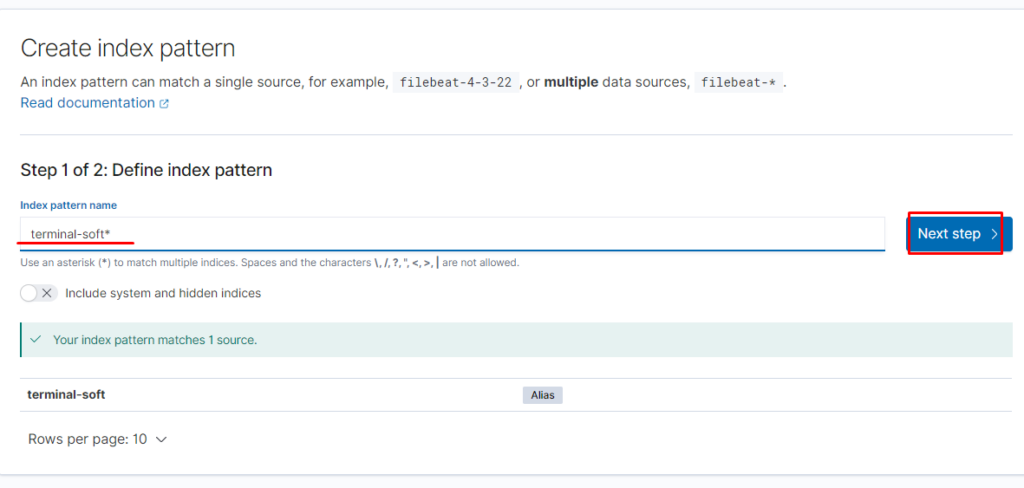

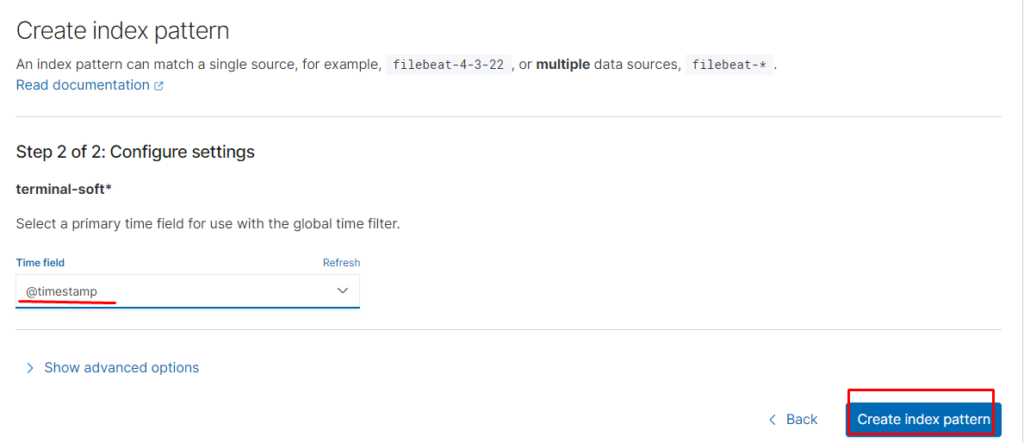

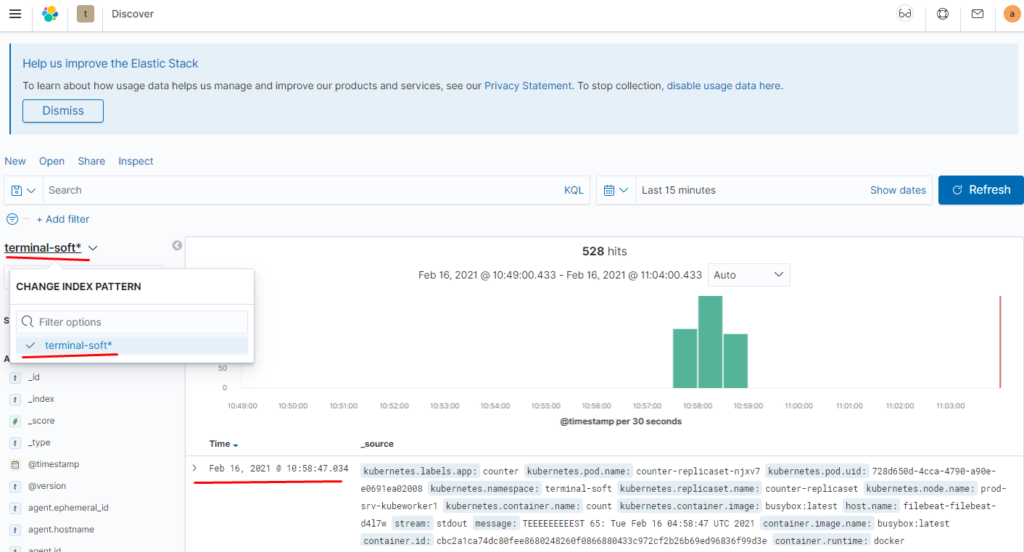

Создаём index pattern

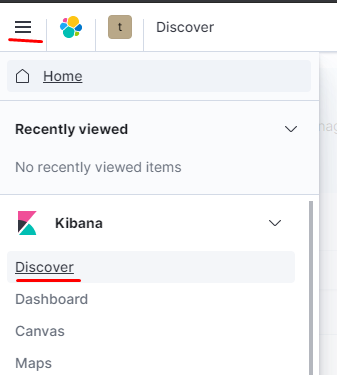

Проверяем:

как видим данные отображаются:

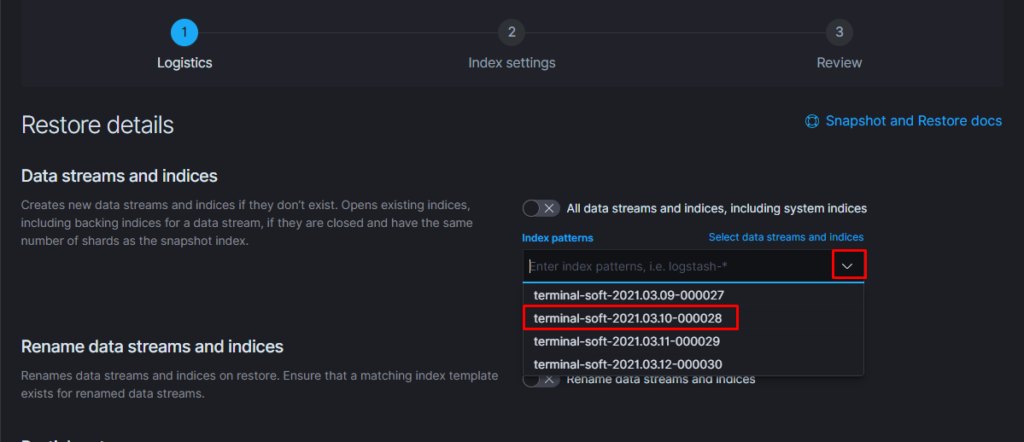

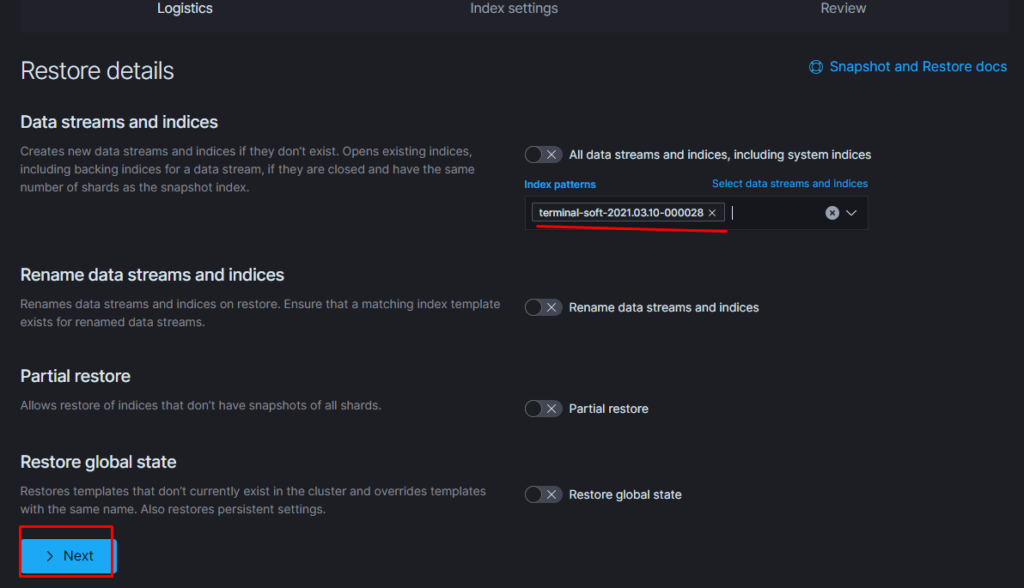

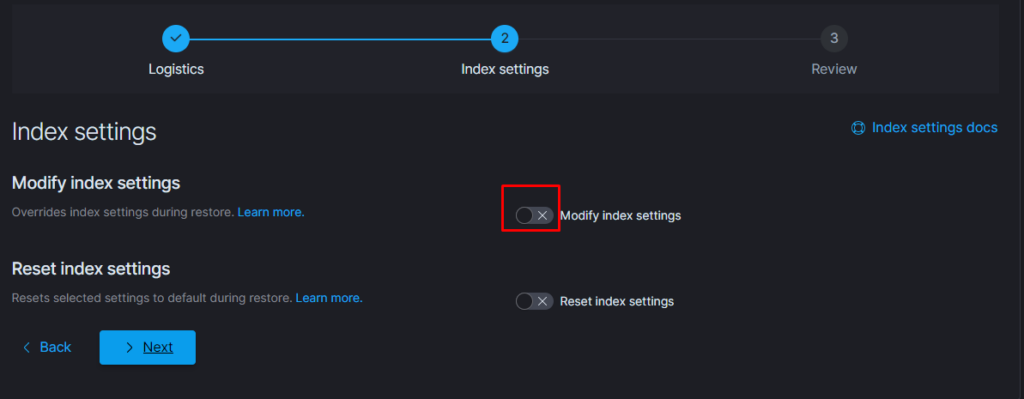

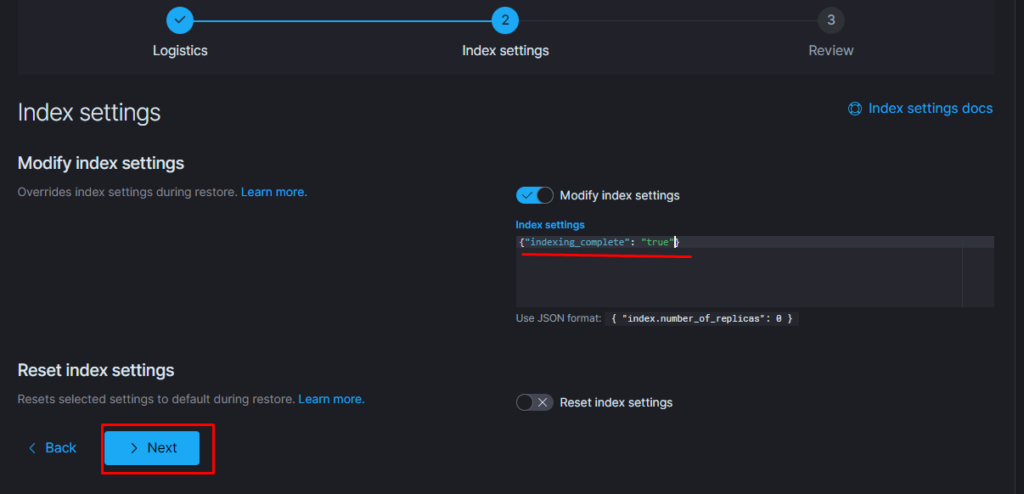

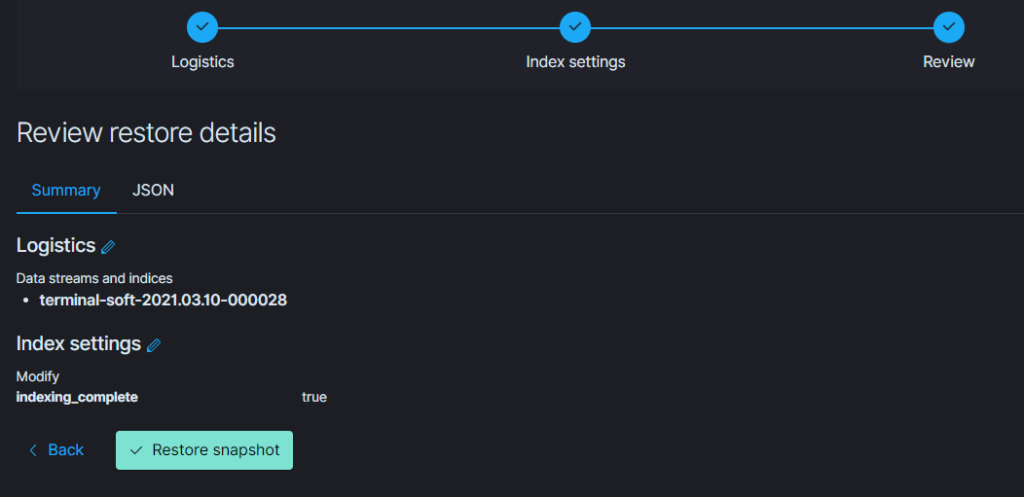

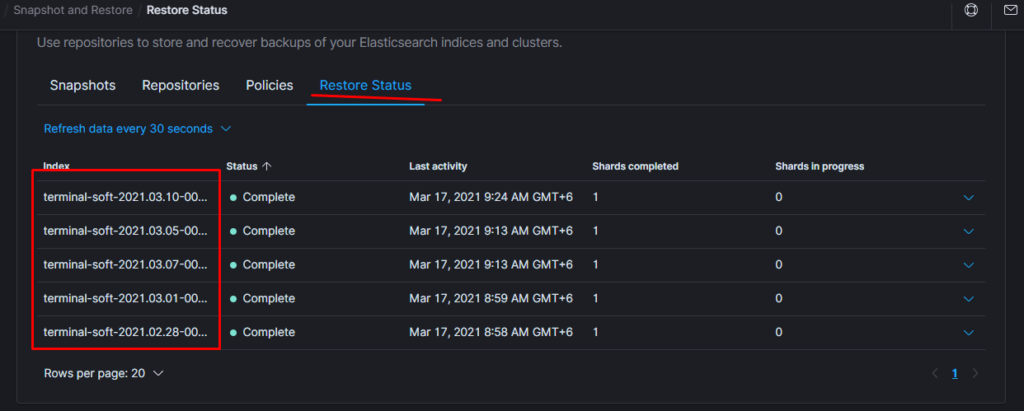

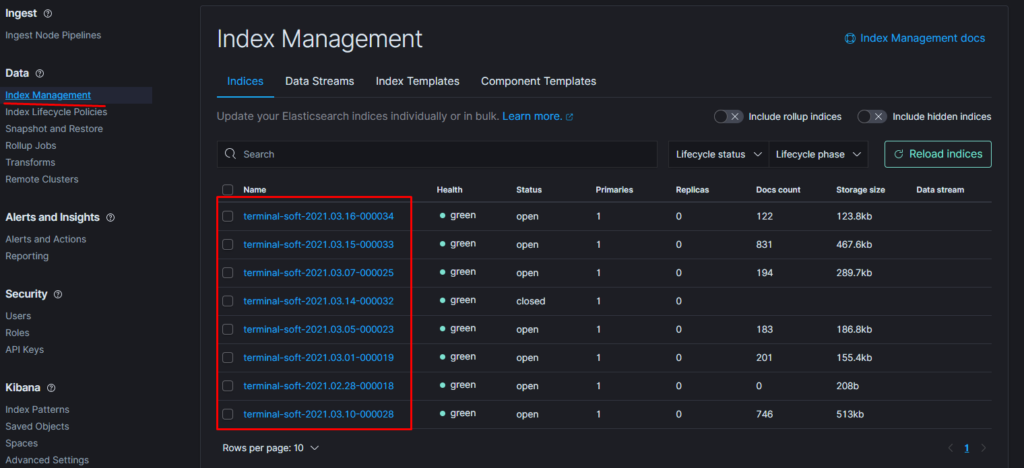

11. Restoring from a snapshot

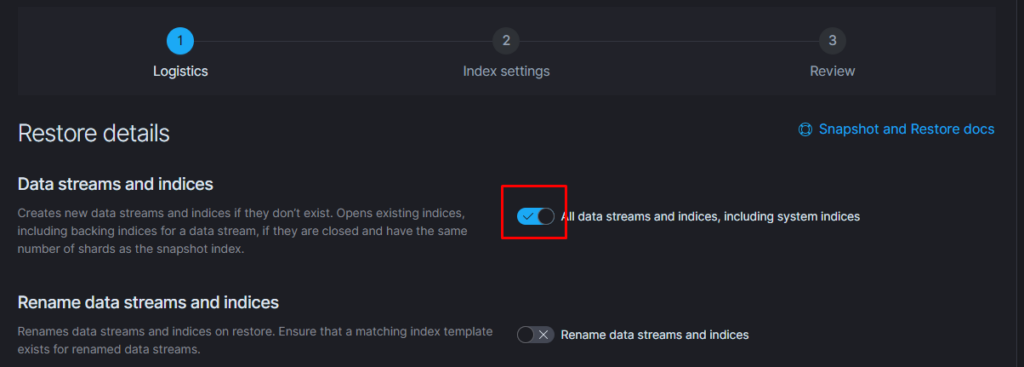

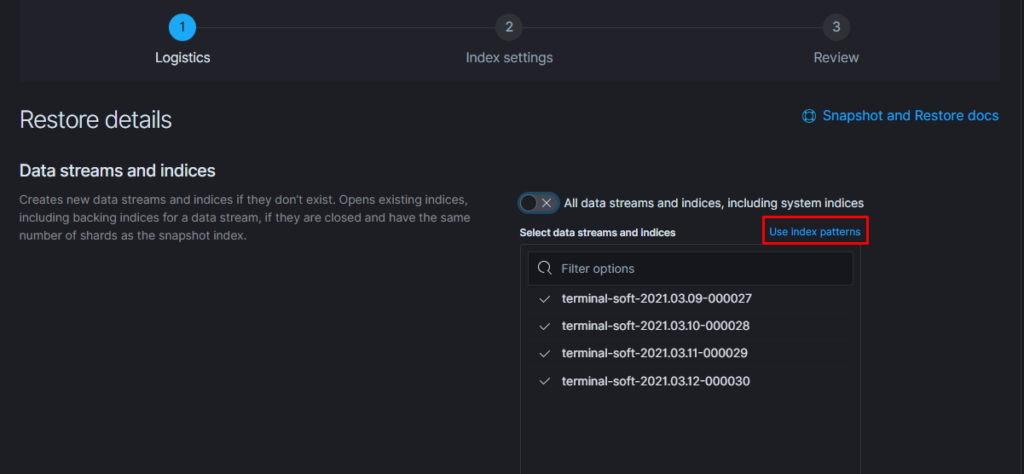

If you need to restore indices from a snapshot, do the following:

Here add the parameter

"indexing_complete": "true"

only if the restore fails with an error like this:

Unable to restore snapshot

[illegal_state_exception] alias [terminal-soft] has more than one write index [terminal-soft-2021.03.04-000022,terminal-soft-2021.03.16-000034]

Therefore, when restoring, take the index from the most recent snapshot.

That is, if you need the index for the 10th, look at a snapshot taken around the 15th. Otherwise, add the parameter "indexing_complete": "true".
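For reference, a restore with that parameter can be expressed as a body for the snapshot restore API. A sketch under assumptions: the repository and snapshot names are left as placeholders, the index name is simply the one from the error message above, and the full setting name `index.lifecycle.indexing_complete` is what I take the UI field to correspond to.

```python
import json


def build_restore_request(index_name, mark_indexing_complete=False):
    """Body for POST _snapshot/<repository>/<snapshot>/_restore.

    With mark_indexing_complete=True the restored index gets the ILM setting
    index.lifecycle.indexing_complete overridden via index_settings.
    """
    body = {"indices": index_name}
    if mark_indexing_complete:
        body["index_settings"] = {"index.lifecycle.indexing_complete": True}
    return body


# Index name taken from the error message above, for illustration only.
restore = build_restore_request(
    "terminal-soft-2021.03.04-000022", mark_indexing_complete=True
)

print("POST _snapshot/<repository>/<snapshot>/_restore")
print(json.dumps(restore, indent=2))
```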

Source: https://sidmid.ru/kubernetes-установка-elk-из-helm-чарта-xpack-ilm/
